The Role of AI in Audiology

From the Labs to the Clinics

Renowned auditory researcher Dr. Robert Harrison brings us up to date on information and research from the Labs in his column, appropriately titled “From the Labs to the Clinics”. Bob is involved in both laboratory and applied/clinical research, including evoked potential and otoacoustic emission studies and behavioural studies of speech and language development in children with cochlear implants. For a little insight into Bob’s interests outside the lab and the clinic, we invite you to climb aboard Bob’s Garden Railway.

Artificial intelligence (AI) is back in the spotlight with the news that Geoffrey Hinton (Professor Emeritus, University of Toronto) has been awarded the 2024 Nobel Prize in Physics, shared with John Hopfield, for foundational discoveries that enable machine learning with artificial neural networks. It is a well-deserved reward for the ground-breaking academic work of the man often called the “godfather of AI”. On this occasion, what could be more appropriate than a commentary on the role of AI in audiology?

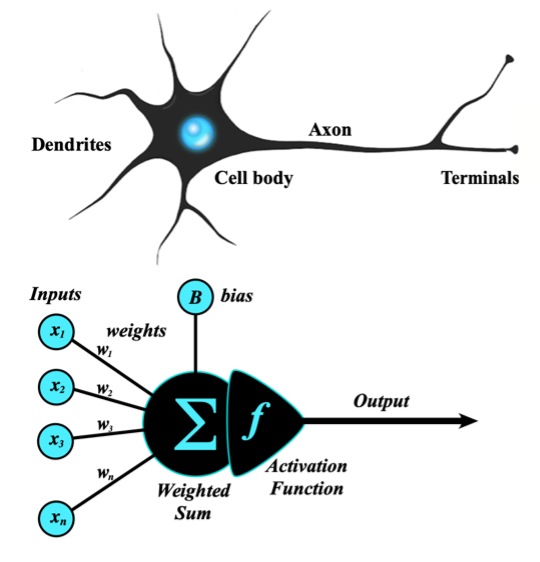

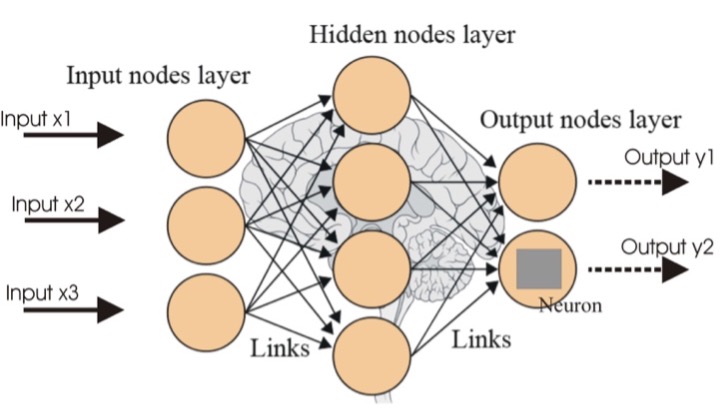

Prof Hinton’s early ideas grew out of modelling neuron activity

AI is playing an increasingly important role in audiology, enhancing diagnostic processes, treatment options, and overall patient care. Key applications of AI in audiology include:

Hearing Loss Detection and Diagnosis

Automated Audiometry: AI-powered systems can administer hearing tests and help diagnose hearing loss efficiently and with consistent results. Because they do not need a trained audiologist to run each test, they can be especially useful in remote or underserved areas.
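Automated audiometers typically automate the same bracketing search an audiologist performs by hand, a modified Hughson-Westlake “down 10, up 5” procedure, and smarter (including AI-based) systems build on that core. The sketch below shows only this rule-based search, not an AI method itself. The function name, its parameters, the simulated listener, and the simplified stopping rule (first level that draws two responses on ascending presentations) are illustrative assumptions, not any product’s implementation.

```python
from collections import Counter
from typing import Callable, Optional


def find_threshold(
    responds: Callable[[int], bool],
    start_db: int = 40,
    min_db: int = -10,
    max_db: int = 120,
    max_trials: int = 60,
) -> Optional[int]:
    """Estimate one pure-tone threshold (dB HL) with a 'down 10, up 5' search.

    `responds(level_db)` is a hypothetical stand-in for the listener pressing
    the response button. The threshold is taken as the first level that draws
    two responses on ascending presentations (a simplified stopping rule).
    """
    level = start_db
    ascending_hits: Counter = Counter()  # level -> responses heard while ascending
    ascending = False  # True if this presentation followed a 5 dB step up
    for _ in range(max_trials):
        heard = responds(level)
        if heard:
            if ascending:
                ascending_hits[level] += 1
                if ascending_hits[level] >= 2:
                    return level
            if level == min_db:
                return min_db  # heard at the quietest level that can be presented
            level = max(min_db, level - 10)  # heard: drop 10 dB
            ascending = False
        else:
            if level == max_db:
                return None  # no response even at maximum output
            level = min(max_db, level + 5)  # not heard: raise 5 dB
            ascending = True
    return None  # no stable threshold within the trial limit


# Simulated listener whose true threshold is 23 dB HL (responds at 23 dB or louder).
print(find_threshold(lambda level: level >= 23))  # → 25
```

Because levels move in 5 dB steps, the estimate lands on the lowest step at or above the simulated listener’s true threshold. A real listener responds probabilistically near threshold, which is why clinical rules require repeated ascending responses.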

Early Detection of Hearing Loss: AI algorithms are increasingly being used to analyze large datasets from patient records, identifying subtle patterns and providing early warnings of hearing loss that may not be apparent through traditional testing methods.

Hearing Aid Technology

Adaptive Hearing Aids: AI is being used to develop more intelligent hearing aids that automatically adjust to different environments. These devices can use machine learning to analyze the user’s surroundings and adapt settings to optimize speech recognition and noise reduction.
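Commercial hearing aids use proprietary classifiers trained on many acoustic features, so the sketch below only illustrates the general idea of “analyzing the surroundings and adapting settings”. It uses a nearest-centroid classifier over two hand-picked features: overall sound level and an estimated signal-to-noise ratio (SNR). The scene labels, centroid values, and program settings are all hypothetical; a real device would learn its classes from labelled recordings.

```python
import math
from typing import Dict, Tuple

# Hypothetical scene centroids: (overall level in dB SPL, estimated SNR in dB).
# In a real device these would be learned from labelled recordings.
CENTROIDS: Dict[str, Tuple[float, float]] = {
    "quiet": (40.0, 10.0),
    "speech": (65.0, 15.0),
    "speech_in_noise": (72.0, 3.0),
    "noise": (75.0, -5.0),
}

# Hypothetical program settings applied for each scene.
PROGRAMS: Dict[str, Dict[str, object]] = {
    "quiet": {"noise_reduction": "off", "directional_mic": False},
    "speech": {"noise_reduction": "mild", "directional_mic": False},
    "speech_in_noise": {"noise_reduction": "strong", "directional_mic": True},
    "noise": {"noise_reduction": "strong", "directional_mic": False},
}


def classify_scene(features: Tuple[float, float]) -> str:
    """Return the scene whose centroid is nearest in Euclidean distance."""
    return min(CENTROIDS, key=lambda scene: math.dist(features, CENTROIDS[scene]))


def select_program(features: Tuple[float, float]) -> Dict[str, object]:
    """Map the classified scene to its (hypothetical) program settings."""
    return PROGRAMS[classify_scene(features)]


# A fairly loud environment with speech only slightly above the noise.
print(classify_scene((70.0, 4.0)))  # → speech_in_noise
```

Nearest-centroid is chosen here for brevity. Because the features are not normalized, both are kept in decibels so that neither dominates the distance.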

Personalized Sound Processing: AI algorithms can personalize the amplification and filtering of incoming sound based on the user’s unique hearing profile.

Cochlear Implants

Improved Sound Processing: AI is enhancing the performance of cochlear implants by improving how sound is processed and delivered to users. Machine learning can be used to adjust stimulation patterns and improve the quality of sound perception for those with cochlear implants.

Speech Recognition: AI algorithms are helping cochlear implant users by improving speech recognition in noisy environments, a common challenge for users of such devices.

Tinnitus Management

Tinnitus Sound Therapy: AI is being employed to develop sound therapy apps for tinnitus management. These apps can generate sounds, customized to an individual’s specific symptoms, that help mask or reduce the perception of tinnitus.

Predictive Modeling: AI can also analyze data from tinnitus sufferers to predict the success of various treatment approaches and offer personalized treatment plans.

Tele-audiology

Remote Diagnostics and Monitoring: AI is facilitating tele-audiology services by enabling remote diagnostics and hearing aid adjustments. Patients can undergo hearing assessments and receive real-time feedback from audiologists without visiting a clinic.

Patient Monitoring: AI systems can track patient data and provide real-time feedback on hearing aid performance, offering alerts for any issues or suggesting optimizations. This is especially helpful for ongoing care and management of hearing devices.

Speech and Language Processing

Enhanced Speech Recognition: AI is improving speech recognition technologies, making it easier for those with hearing impairments to communicate using speech-to-text applications. This technology is critical for improving accessibility and communication for those with hearing challenges.

Real-time Captioning: AI-driven speech recognition can be used for live captioning during conversations, which helps individuals with hearing impairments participate more fully in social interactions and events.

AI-based Auditory Training

Auditory Rehabilitation: AI is being used to create personalized auditory training programs that help individuals improve their listening skills, especially after receiving a hearing aid or cochlear implant. These programs can adapt to a user’s progress and adjust difficulty levels accordingly.
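One familiar way to make training “adapt to a user’s progress” is an adaptive staircase borrowed from psychoacoustics. This sketch assumes exercises are presented in background noise, with difficulty set by the speech-to-noise ratio (SNR). It uses a 2-down/1-up rule (Levitt’s transformed up-down method), which settles near 70.7% correct. The class name, starting SNR, step size, and limits are hypothetical, and commercial training programs may use quite different, including ML-based, adaptation rules.

```python
from dataclasses import dataclass


@dataclass
class Staircase:
    """2-down/1-up adaptive track on speech-to-noise ratio (SNR, dB).

    Two correct answers in a row make the task harder (lower SNR);
    any error makes it easier (higher SNR). All defaults are hypothetical.
    """

    snr_db: float = 10.0
    step_db: float = 2.0
    min_snr_db: float = -10.0
    max_snr_db: float = 20.0
    _correct_run: int = 0  # consecutive correct answers since the last change

    def update(self, correct: bool) -> float:
        """Record one answer and return the SNR for the next exercise."""
        if correct:
            self._correct_run += 1
            if self._correct_run == 2:
                self.snr_db = max(self.min_snr_db, self.snr_db - self.step_db)
                self._correct_run = 0
        else:
            self.snr_db = min(self.max_snr_db, self.snr_db + self.step_db)
            self._correct_run = 0
        return self.snr_db


track = Staircase()
for answer in [True, True, True, True, False, True, True]:
    track.update(answer)
print(track.snr_db)  # → 6.0
```

Requiring two correct answers before making the task harder keeps the listener succeeding most of the time while still pushing toward more difficult listening conditions.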

Data Analysis and Research

Predictive Analytics: AI can analyze patient data to predict outcomes, identify trends, and provide personalized treatment recommendations. This helps audiologists make more informed decisions and tailor interventions to each patient's specific needs.

Genomic Research: AI can be applied in genetic studies to identify links between genetic variants and hearing loss, improving our understanding of hereditary hearing conditions and pointing to potential treatments.

In summary, AI has the potential to revolutionize audiology by improving diagnostic accuracy, enhancing hearing device technology, and offering personalized treatment options. By automating processes and improving the precision of interventions, AI is transforming how hearing loss is diagnosed and managed.

As a further demonstration of the value of AI in audiology, I should acknowledge that much of the above review was generated by AI algorithms (ChatGPT).