aVOR: We Walk You Through an Educational Tool with the Power to Help You Understand the Vestibulo-Ocular Reflex

Semicircular canals. Otoliths. If these terms deflate your confidence or fill you with a sense of dread, you are not alone. An understanding of vestibular structures in the inner ear and their function is unquestionably beneficial when you are engaged in vestibular practice, but these mechanics are also notoriously difficult to grasp. Some people have a knack for imagining a 3-D rendering of a structure that is pictured only in 2-D, but the rest of us are left squinting hopelessly at the available images. To add to the confusion, it is common for textbook entries on the subject to forgo accurate explanations in favour of gross oversimplifications. All of this can feel like an insurmountable challenge.

This is an article for anyone who has ever grappled with this challenge. It’s for anyone who has nodded along in faux-comprehension when the topic is raised, silently hoping that no one will discover this gap in their knowledge. As it turns out, there’s an app that will help you to feel like an expert in no time!

aVOR: An Educational Tool

The aVOR app (available for free on the Apple App Store) is an interactive educational tool that was developed to help users gain a better understanding of how the vestibular system functions, specifically the angular vestibulo-ocular reflex (aVOR). You might recall that the vestibulo-ocular reflex is what allows us to keep our visual field in focus automatically, even during fast head rotations. When the VOR is working correctly, head movements are accompanied by equal and opposite eye movements [1]. The general term VOR usually refers to angular or rotational-type movements (aVOR), though our vestibular system can also generate compensatory eye movements for translational or linear movements (tVOR).
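The idea of "equal and opposite" eye movements is often summarized as a single number, the VOR gain: the ratio of eye velocity to head velocity, with the sign flipped so that a perfectly compensating reflex gives a gain of about 1. The following is only a minimal sketch of that idea and is not taken from the aVOR app. The function name `vor_gain`, the made-up velocity samples (in degrees per second) and the choice of a least-squares slope through the origin are all illustrative assumptions; clinical systems may calculate gain differently.

```python
def vor_gain(head_velocity, eye_velocity):
    """Estimate VOR gain from paired velocity samples (deg/s).

    Assumption: gain is taken as the least-squares slope (through the
    origin) of eye velocity against head velocity, sign-flipped so that
    equal and opposite eye movements give a gain of 1.
    """
    numerator = sum(h * e for h, e in zip(head_velocity, eye_velocity))
    denominator = sum(h * h for h in head_velocity)
    if denominator == 0:
        raise ValueError("head velocity trace is all zeros")
    return -numerator / denominator


# Hypothetical head impulse: velocity rises to 150 deg/s and falls again.
head = [0.0, 50.0, 100.0, 150.0, 100.0, 50.0, 0.0]

# Eyes move equally and in the opposite direction: gain of about 1.
perfect_eye = [-h for h in head]

# Eyes move at only half the head velocity: gain of about 0.5.
weak_eye = [-0.5 * h for h in head]

print(vor_gain(head, perfect_eye))
print(vor_gain(head, weak_eye))
```

In this toy example, the second trace falls short of the head movement, which is the kind of shortfall that corrective saccades have to make up.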

The VOR and the video head impulse test (vHIT) have been covered in more depth previously in Striking the Right Balance.

The aVOR app was developed at the University of Sydney, School of Psychology. The app illustrates how the aVOR works by taking advantage of sensors embedded in most smartphones that detect rotation and movement.

For all of its educational value, the aVOR app can be somewhat challenging to navigate. Our aim for this article is to provide some orientation so that potential users can jump right into what is an excellent learning opportunity.

aVOR Navigation and Manipulation

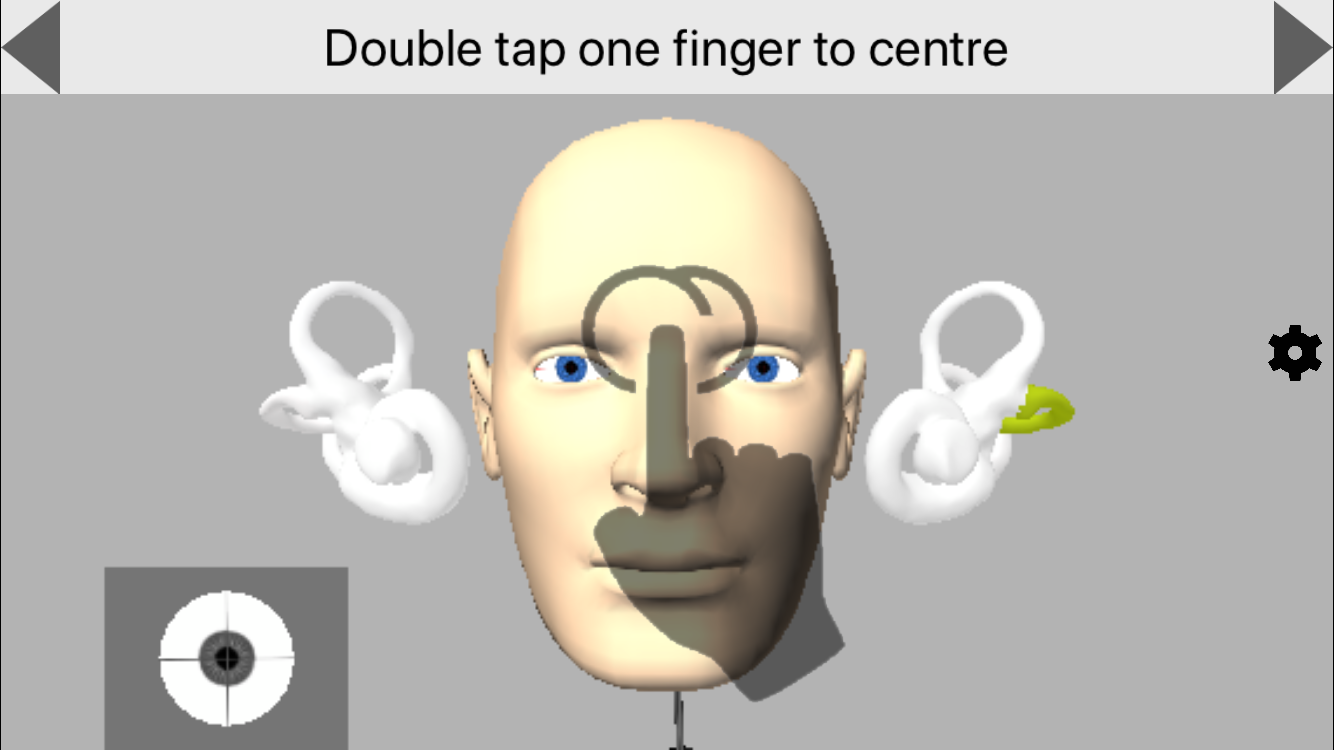

Helpfully, the aVOR app teaches you how to manipulate each screen as soon as you open it. The first few screens walk you through how to move the head and eyes of the on-screen model. Holding one finger down on the screen allows you to rotate the head in all planes of movement and re-fixate the eyes in a frontward direction (in relation to the head position). Two fingers on the screen will move the head away from centre. You can pinch with two fingers to zoom in and out, and a double tap on the screen will re-centre the head and eyes to the frontward direction. In Figure 1, you will note that the on-screen model’s left lateral canal is shown in a yellow-green colour as a way of illustrating that the canal is dysfunctional: more on that later!

Figure 1. Introductory screens walk users through the app navigation (e.g., double tap with one finger to centre the image).

What you will notice next (Figure 2) is that, when the head is manually rotated in any direction through the app, the semicircular canals light up in red or blue. This colour coding shows you which canals are activated (red) and inhibited (blue), and it appears on all screens throughout the app.

Figure 2. Activation (red) and inhibition (blue) are visible during movement.

If you get lost in the app at any time, tap the gears button on the right side of the screen. This brings you to the settings screen, where you can navigate back to the desired screen using the icons at the bottom of the page.

Now that we understand how to manipulate the screen, it’s time for the real fun!

aVOR Options

The aVOR app has several different display screens that you can toggle between:

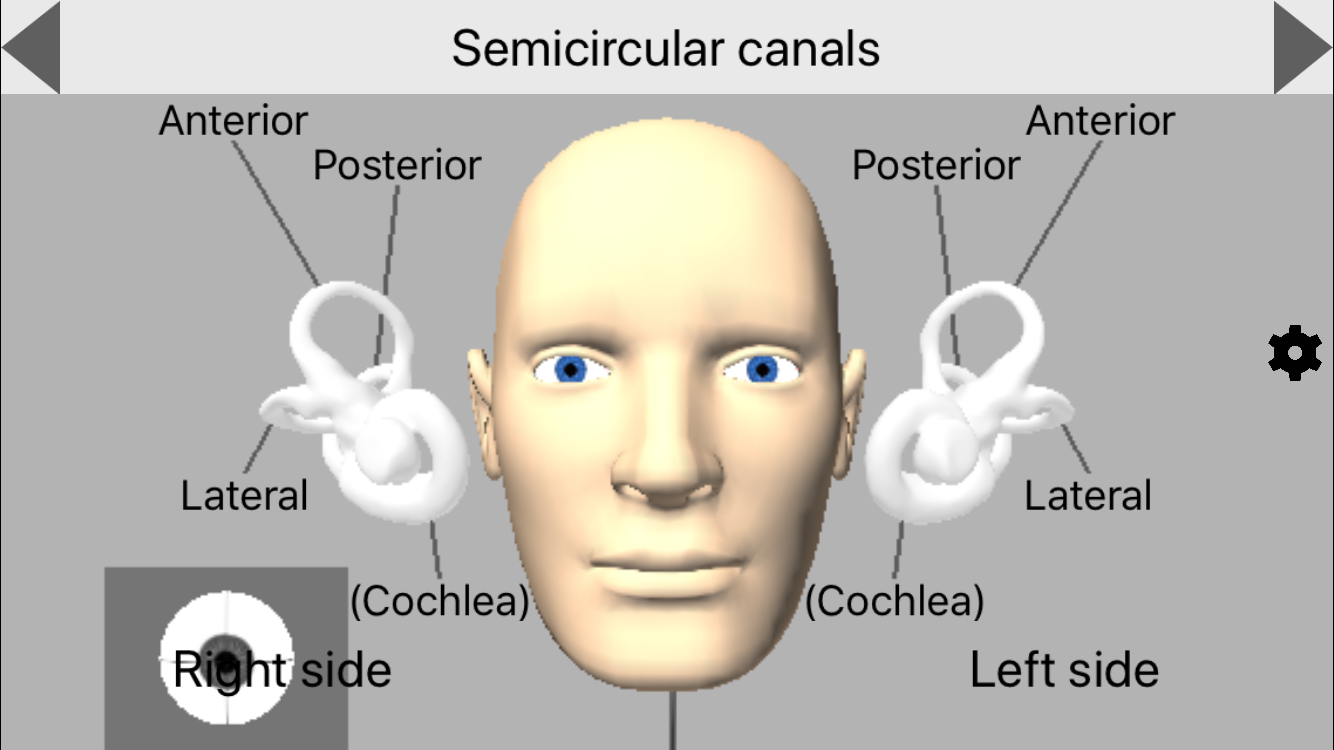

Semicircular Canals

This screen (Figure 3) labels the semicircular canals so that it is possible to see which way the canals are oriented with different head movements.

Figure 3. Semicircular Canals.

Actual Size of the Labyrinths

This screen (Figure 4) does exactly what it says – it shows the actual size of the labyrinths in relation to the head.

Figure 4. Actual size of labyrinths.

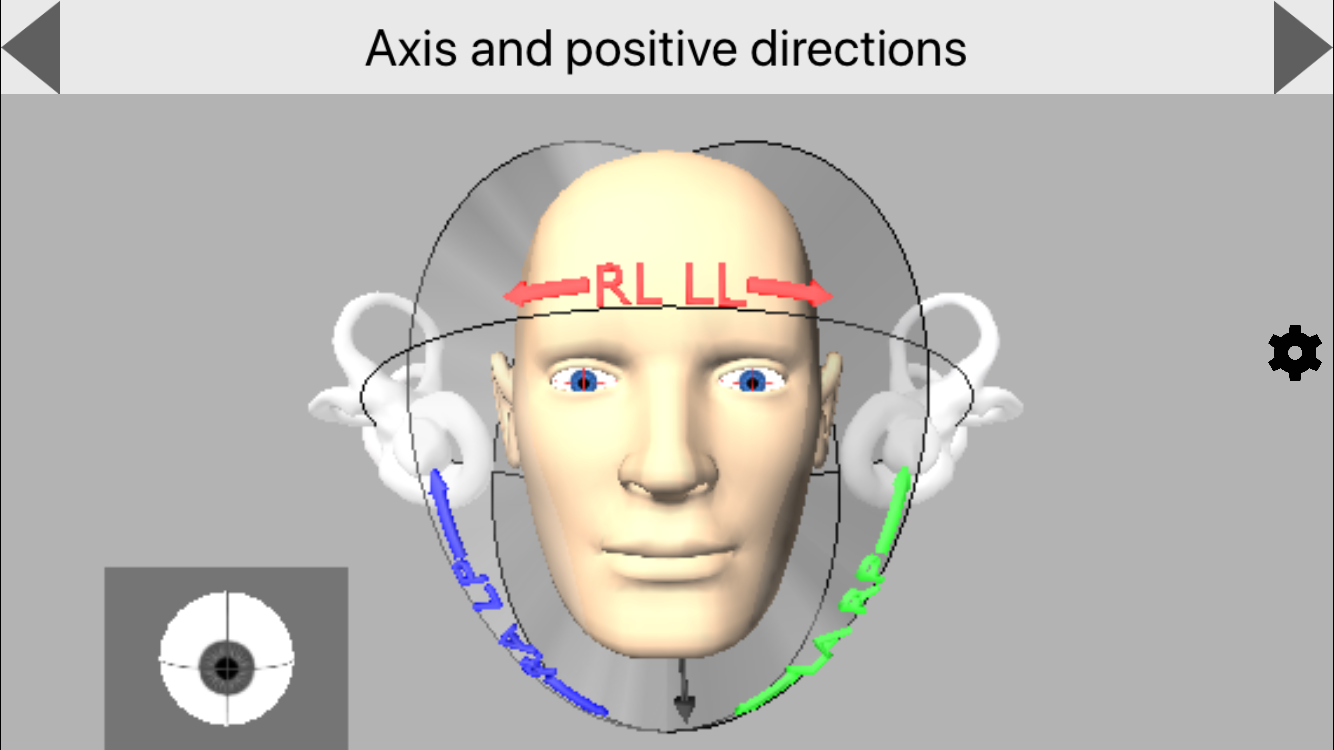

Axis and Positive Directions

This screen (Figure 5) shows which paired semicircular canals are activated or inhibited for all planes of movement, as used in lateral and vertical (LARP/RALP) canal testing. See if you can turn the head in the appropriate manner to activate only the left anterior and right posterior canals. It’s difficult!

Figure 5. Axis and positive directions: main screen.

You can also turn the head to a bird’s eye view (which is more similar to a clinician’s point of view) and see which way the head has to be moved to get clean LARP and RALP head impulses without artifact from other canals (Figure 6).

Figure 6. Axis and positive directions after manipulating screen for a bird’s eye view.
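The pairing of canals described above can be approximated with a little vector arithmetic. The sketch below is not how the aVOR app is implemented; it is an idealized model that assumes the lateral canals are perfectly horizontal, the vertical canals sit at exactly 45 degrees to the midline, and the coordinate axes are x = forward, y = left, z = up. The names `CANAL_AXES`, `canal_drive` and `canal_colour` are hypothetical. Each canal is given a "positive direction" axis, and the head's angular velocity is projected onto it: a positive result stands for activation (red) and a negative result for inhibition (blue).

```python
import math

S = 1 / math.sqrt(2)

# Idealized positive-direction axes (right-hand rule; x forward, y left, z up).
# Assumption: real canals are neither exactly orthogonal nor exactly at 45 degrees.
CANAL_AXES = {
    "left lateral": (0.0, 0.0, 1.0),    # excited by turning the head left
    "right lateral": (0.0, 0.0, -1.0),  # excited by turning the head right
    "left anterior": (-S, S, 0.0),      # nose down combined with left ear down
    "right posterior": (S, -S, 0.0),    # LARP partner of the left anterior canal
    "right anterior": (S, S, 0.0),      # nose down combined with right ear down
    "left posterior": (-S, -S, 0.0),    # RALP partner of the right anterior canal
}


def canal_drive(omega):
    """Project head angular velocity (deg/s) onto each canal axis."""
    return {
        name: sum(a * w for a, w in zip(axis, omega))
        for name, axis in CANAL_AXES.items()
    }


def canal_colour(drive, tolerance=1e-9):
    """Map a drive value to the colour coding described in the article."""
    if drive > tolerance:
        return "red"   # activated
    if drive < -tolerance:
        return "blue"  # inhibited
    return "none"      # canal not stimulated


# A 100 deg/s impulse exactly in the LARP plane, toward the left anterior canal.
larp_impulse = tuple(100.0 * a for a in CANAL_AXES["left anterior"])
for name, drive in canal_drive(larp_impulse).items():
    print(f"{name:16s} {canal_colour(drive)}")
```

In this simplified model, only a rotation lying exactly in the LARP plane leaves the other four canals silent. Any small deviation gives them a non-zero drive, which is one way to see why isolating a single canal pair in the app takes some practice.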

Responses to Angular Velocity

This screen allows the user to view the activation and inhibition of the semicircular canals, as well as the resultant eye movements, as rotations of the head are animated in the different planes (Figure 7).

Figure 7. Responses to angular velocity.

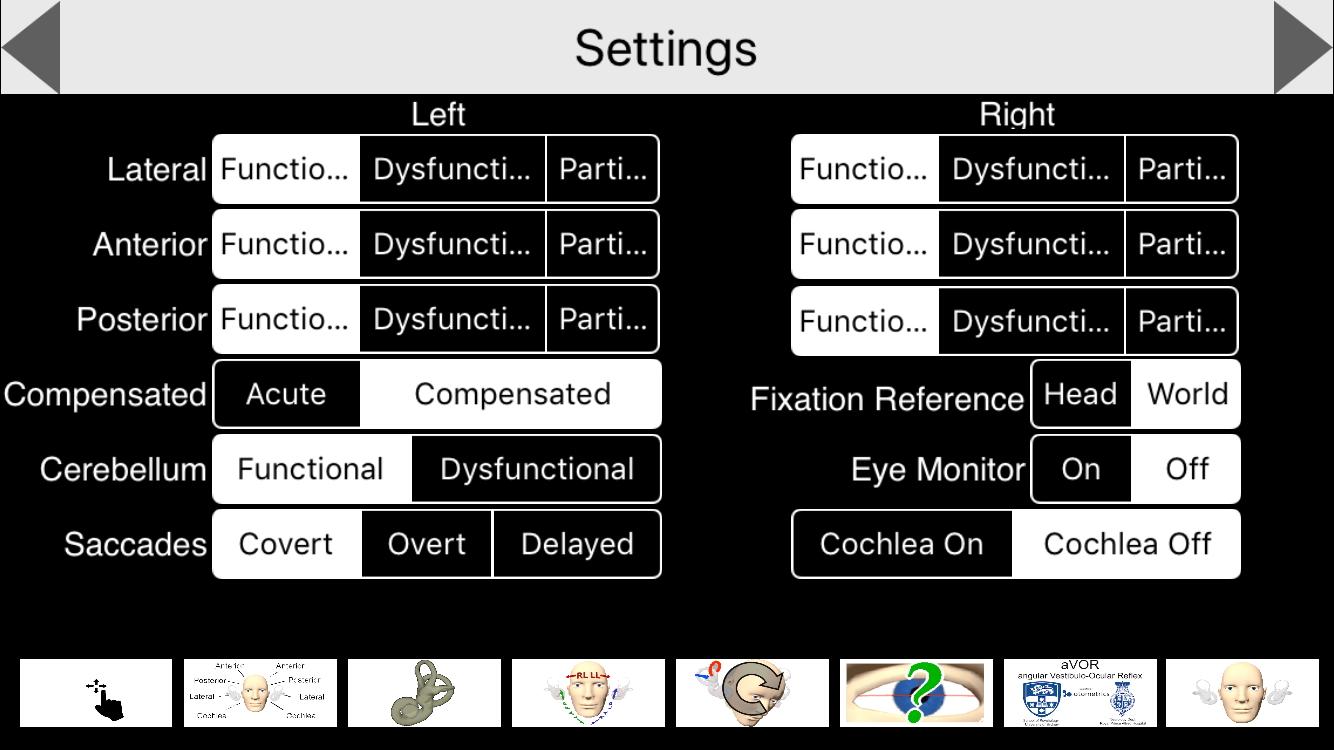

Settings and Learning Dysfunction

At this point, your head is probably spinning (pun intended) from trying to keep track of all of the different features we’ve mentioned so far. As it turns out, we’ve barely scratched the surface of what this app offers: the magic lies in the ability to use the settings screen to simulate a number of relevant pathologies (Figure 8).

Figure 8. The settings screen can be used to toggle between the different screens or select a desired pathology to interact with.

Using the aVOR app, you are able to create dysfunction in any of the semicircular canals and the cerebellum and choose whether or not the dysfunction is compensated. You can also choose what types of corrective saccades are present with the dysfunction (covert saccades, overt saccades and delayed overt saccades). You can even select the “question mark” option, which auto-generates a random pathology for you to interact with. As previously mentioned, canals that have been selected to be dysfunctional are shown in yellow-green (Figure 9).

Figure 9. Observing eye movements from an auto-generated pathology.

Using the Fixation Reference setting, you can change the fixation of the head. Either the head is fixed and the world is moving (e.g., a person sitting on a train and watching the world go by) or the world is fixed and the head is moving (e.g., a vHIT where the head is moved side to side as the patient looks at a stationary dot). For the purposes of VOR testing and learning the corrective eye movements, it’s best to stay in the world fixation view.

Lastly, the Eye Monitoring setting allows you to choose if you want to include a close-up view of the eye during head movement. This will help you to see the compensatory eye movements that result from the dysfunction.

In addition to the irregular eye movements that are typically noted from VOR dysfunction, the settings screen also has a “particle” option for each of the semicircular canals. This is an exciting option for anyone who has struggled to visualize what is happening during a particle repositioning maneuver. With a bit of practice, it is possible to approximate the steps of a particle repositioning maneuver and observe the movement of the particles and the resultant eye movements. Note: this feature simulates canalithiasis only (Figure 10).

Figure 10. Particle repositioning for the right anterior canal and the resultant eye movement.

Pros and Cons

Pros

All in all, this is a great app to help you learn the different orientations of the semicircular canals, to understand how to stimulate the correct planes for lateral and vertical vHIT, and to visualize how repositioning works for BPPV (canalithiasis variants only). The app also allows you to see what to expect with an acute uncompensated lesion and demonstrates how difficult it is to see covert saccades with the naked eye.

Cons

Unfortunately, the aVOR app still has some limitations when it comes to using it as a training tool. We found that attempting to simulate a bedside head impulse test was not forgiving in how it displayed the resultant eye movements for certain patterns of dysfunction. For example, a lateral-only unilateral loss would also show significant torsional corrections in the vertical planes unless the gaze angles and planes of stimulation were oriented perfectly – no easy feat for beginners and something that could easily become a source of confusion. Also, as great as the particle feature is, it is challenging to replicate the desired movements fluidly enough to truly simulate a particle repositioning maneuver. However, even the ability to approximate the maneuvers is very useful, especially for those who have already been trained to do the maneuvers on human subjects but simply need help visualizing the movement of the particles.

Overall

aVOR is a unique app that has the flexibility and breadth of features to be more than worthy of occupying space on the iPhones of vestibular audiologists. There may also be a place for this app as a tool for patient education, though this would have to be done carefully as the many features of the app can be visually distracting.

Reference

1. Roy FD, Tomlinson RD. Characterization of the vestibulo-ocular reflex evoked by high-velocity movements. Laryngoscope 2004;114:1190–93.