How Vision Influences Speech Understanding in Age-Related Hearing Loss

Patricia V. Aguiar & Brandon T. Paul

Department of Psychology, Toronto Metropolitan University

Everyday speech communication typically involves a combination of auditory and visual modalities – people listen to a talker and can see the talker's lip and facial movements during speech. Visual cues are especially beneficial for speech understanding in older adults with hearing loss and may help them compensate for age-related decline (Hallam & Corney, 2014). It is unclear how visual speech affords these benefits, and answering this question has implications for interventions that improve speech communication in this population. For example, older adults with hearing loss who use hearing aids often have difficulty understanding speech in noise, but rehabilitation programs that encourage the use of visual cues may remediate these challenges (Bernstein et al., 2022a). Here we review two lines of evidence that address how visual functioning could be altered with age-related hearing loss. First, we consider studies that demonstrate enhanced visual processing or audio-visual integration. Second, we consider neuroplastic brain changes that arise after hearing loss and could modify how visual information is processed.

Audio-Visual Integration

Visual speech cues aid auditory speech perception through audio-visual integration, the process by which the brain combines information from sight and sound. A basic question is whether older adults are better integrators of auditory and visual speech or whether they integrate these signals differently to compensate for their hearing loss. There is extensive literature on audio-visual integration and aging, including many studies finding stronger integration effects in older adults (e.g., see review by Pepper & Nuttall, 2023). A major obstacle to drawing conclusions from this research is that hearing and visual acuity are often not measured in participants, a gap that affects almost half of studies (Basharat et al., 2022). Without assessment of sensory acuity, it is difficult to determine whether integration effects arise specifically as a consequence of sensory decline or because of widespread changes in cognition or perception due to aging. In addition, hearing acuity is measured using different methods (self-report or audiometry) or different calculations (better-ear pure-tone average below 4 kHz, or PTA across higher frequencies), limiting the conclusions that can be drawn across studies. So, while it is plausible that audio-visual integration is enhanced in age-related hearing loss, firm conclusions are tempered by mixed findings and inconsistent methodologies.

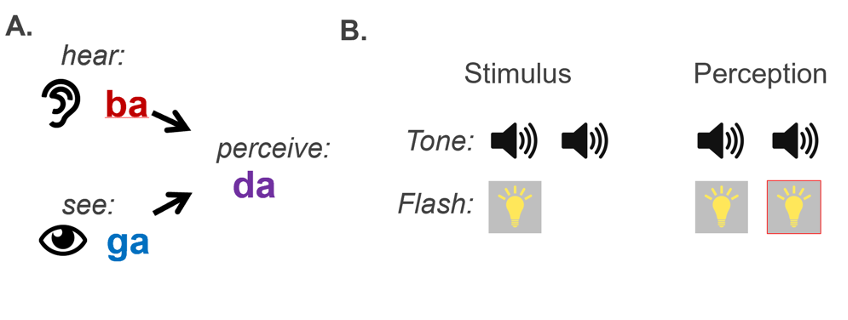

Studies designed to investigate age-related hearing loss directly suggest that audio-visual integration occurs differently in this population, with integration possibly biased toward vision as a form of compensation. The McGurk effect is one classic form of audio-visual integration, which occurs when a person experiences an illusory speech utterance from conflicting visual and auditory speech signals (McGurk & MacDonald, 1976). As illustrated in Figure 1, presenting an auditory phoneme /ba/ aligned with a video of a person speaking the phoneme /ga/ sometimes yields the illusory perception of /da/, a fusion of the auditory and visual phonemes. Whether people perceive the auditory phoneme, the visual phoneme, or a fusion of the two can indicate whether they weight one sensory modality more heavily than another. In support of a bias toward visual input, people with age-related hearing loss, as well as cochlear implant (CI) recipients, appear to experience McGurk fusions more frequently than typical-hearing participants (Rosemann & Thiel, 2018; Stropahl & Debener, 2017). This suggests that hearing loss increases the influence of the visual modality on perception.

The sound-induced flash illusion is another commonly used illusory stimulus for assessing audio-visual integration (Shams et al., 2000). In this illusion, two auditory tones are played in quick succession. The first tone is paired with a visual flash, while the second is not. Participants sometimes perceive an illusory visual flash with the second tone even though it is absent, which is the sound-induced illusory flash. Interestingly, adults with untreated hearing loss perceive the second flash less often than hearing aid users of similar age and degree of hearing loss (Gieseler et al., 2018). This illusion may be driven by stronger weighting of the auditory tone than of the visual flash: participants with better hearing can encode the tone reliably, allowing for the integration that leads to the illusion. If the auditory signal is less reliable because of hearing loss, integration is less likely and the illusion is less frequent (Gieseler et al., 2018). This finding could be the other side of the coin to the McGurk effect in age-related hearing loss, in that both effects suggest a reweighting of sensory integration toward vision and away from hearing. In agreement, other studies have shown that people with hearing loss are more easily influenced by visual distractors during auditory tasks than by auditory distractors during visual tasks (Puschmann et al., 2014).
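
The reweighting argument above can be made concrete with a standard reliability-weighted model of cue combination. This is offered only as an illustrative sketch and is not the model tested in the cited studies; the symbols $s_A$, $s_V$, $\sigma_A$, and $\sigma_V$ are assumptions introduced here for illustration.

```latex
% Illustrative sketch (not from the cited studies): reliability-weighted
% combination of an auditory estimate s_A and a visual estimate s_V,
% assuming independent Gaussian noise with standard deviations
% \sigma_A and \sigma_V (hypothetical symbols).
\hat{s} = w_A s_A + w_V s_V,
\qquad
w_A = \frac{1/\sigma_A^{2}}{1/\sigma_A^{2} + 1/\sigma_V^{2}},
\qquad
w_V = 1 - w_A
```

Under this sketch, hearing loss corresponds to a larger $\sigma_A$, which lowers $w_A$ and raises $w_V$. When $\sigma_A = \sigma_V$, the two senses are weighted equally ($w_A = 0.5$); if $\sigma_A$ is twice $\sigma_V$, then $w_A = (1/4)/(1/4 + 1) = 0.2$ and the combined percept is dominated by vision. Such a shift would be broadly consistent with fewer sound-induced flashes and a stronger visual influence in the McGurk task, although the studies reviewed here do not establish that this particular model applies.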

One issue is that these illusions may not reflect the audio-visual perception of natural, everyday communication. For example, performance on the McGurk task is not correlated with audio-visual speech perception in typical-hearing adults (Van Engen et al., 2017) or CI users (Butera et al., 2023). Despite this limitation, recent research supports the view that visual speech benefits people with age-related hearing loss. For example, a study using continuous, natural speech in noise showed that people with age-related hearing loss benefit more from seeing the talker, both in speech understanding and in brain responses (Puschmann et al., 2019).

Are people with hearing loss better lipreaders, which might explain the visual benefits? Findings are mixed. One study found that people with hearing loss have similar speechreading performance for phonemes and sentences compared to typical-hearing controls, although they showed a slight advantage in perceiving isolated visual words (Tye-Murray et al., 2007). However, when audio-visual words were presented with background babble individualized for each participant, word perception was similar across both groups (Tye-Murray et al., 2007), making it unclear whether speechreading supported audio-visual speech perception in noise. These findings imply that lipreading ability does not emerge tacitly with hearing loss in aging. Intentional speechreading training, by contrast, could be a promising way to improve speech-in-noise perception, and interest in it has recently grown. Bernstein and co-authors (2022a) highlight that poor lipreading ability in adults with and without hearing loss leaves considerable room for improvement, and a training study in younger typical-hearing adults shows that speechreading training can improve audio-visual speech perception in noise (Bernstein et al., 2022b). A promising recent study showed similar effects in middle-aged and older adults (Schmitt et al., 2023).

Cross-modal Plasticity

Neuroplastic brain changes that occur after hearing loss may also influence visual abilities. Research in deaf humans and animals clearly shows that the auditory cortex – which is no longer receiving input from the ear – can be remapped or repurposed to support visual functions such as improved acuity in the visual periphery or motion detection (Lomber et al., 2010; Lambertz et al., 2005). This cross-modal form of plasticity also appears to occur in noise-induced or age-related hearing loss that does not lead to total deafness, as has been shown in animal models (Schormans et al., 2017) and human EEG studies (Campbell & Sharma, 2014; Stropahl & Debener, 2017).

One concern with visual cross-modal plasticity is how it affects speech perception. For example, speech outcomes in CI users are worse for individuals who show evidence of cross-modal plasticity in brain recordings made before implantation (Lee et al., 2001). The proposed explanation was that if auditory cortical neurons were recruited to perform visual functions during the period of deafness, they would not be available to support auditory speech perception during rehabilitation. Based on these findings, there have been recommendations to limit visual language use if it encourages cross-modal plasticity. Adults with mild-to-moderate hearing loss also show worse speech-in-noise perception if they have larger brain responses to visual events (Campbell & Sharma, 2014), supporting this view. However, more recent research has argued against the view that cross-modal plasticity is detrimental. Cross-modal plasticity does not appear to interfere with the responsiveness of the auditory cortex to cochlear implantation in congenitally deaf cats (Land et al., 2016), and in humans, some research shows that cross-modal activation of the auditory cortex after hearing restoration is related to better, not worse, speech outcomes (Anderson et al., 2017; Paul et al., 2022). These findings further motivate studies incorporating speechreading to improve speech-in-noise perception (Schmitt et al., 2023; Bernstein et al., 2022a). Monitoring visual brain responses during rehabilitation could reveal whether visual cross-modal plasticity accompanies these benefits.

Conclusions

The studies above highlight current evidence for altered visual processing in age-related hearing loss, which is supported by (1) increased reliance on visual information, or possibly enhanced audio-visual integration, or (2) visual cross-modal remapping of the auditory cortex, which could affect speech perception. However, some of this research is limited by how well findings generalize to day-to-day speech perception and by the inconsistency of methods and measurements across studies. Nonetheless, visual speech cues are beneficial for speech perception (especially in noise), could involve neuroplastic changes in the brain that better support vision, and can be used to inform more effective courses of rehabilitation for older adults with hearing difficulty.

References

- Anderson, C. A., Wiggins, I. M., Kitterick, P. T., & Hartley, D. E. (2017). Adaptive benefit of cross-modal plasticity following cochlear implantation in deaf adults. Proceedings of the National Academy of Sciences, 114(38), 10256-10261.

- Basharat, A., Thayanithy, A., & Barnett-Cowan, M. (2022). A Scoping Review of Audio-visual Integration Methodology: Screening for Auditory and Visual Impairment in Younger and Older Adults. Frontiers in Aging Neuroscience, 13, 772112.

- Bernstein, L. E., Jordan, N., Auer, E. T., & Eberhardt, S. P. (2022a). Lipreading: A review of its continuing importance for speech recognition with an acquired hearing loss and possibilities for effective training. American Journal of Audiology, 31(2), 453-469.

- Bernstein, L. E., Auer, E. T., & Eberhardt, S. P. (2022b). During lipreading training with sentence stimuli, feedback controls learning and generalization to audio-visual speech in noise. American Journal of Audiology, 31(1), 57-77.

- Butera, I. M., Stevenson, R. A., Gifford, R. H., & Wallace, M. T. (2023). Visually biased perception in cochlear implant users: a study of the McGurk and sound-induced flash illusions. Trends in Hearing, 27, 23312165221076681.

- Campbell, J., & Sharma, A. (2014). Cross-modal re-organization in adults with early stage hearing loss. PLoS ONE, 9(2), e90594.

- Gieseler, A., Tahden, M. A., Thiel, C. M., & Colonius, H. (2018). Does hearing aid use affect audio-visual integration in mild hearing impairment? Experimental Brain Research, 236, 1161-1179.

- Hallam, R. S., & Corney, R. (2014). Conversation tactics in persons with normal hearing and hearing-impairment. International Journal of Audiology, 53(3), 174-181.

- Lambertz, N., Gizewski, E. R., de Greiff, A., & Forsting, M. (2005). Cross-modal plasticity in deaf subjects dependent on the extent of hearing loss. Cognitive Brain Research, 25(3), 884-890.

- Land, R., Baumhoff, P., Tillein, J., Lomber, S. G., Hubka, P., & Kral, A. (2016). Cross-modal plasticity in higher-order auditory cortex of congenitally deaf cats does not limit auditory responsiveness to cochlear implants. Journal of Neuroscience, 36(23), 6175-6185.

- Lee, D. S., Lee, J. S., Oh, S. H., Kim, S. K., Kim, J. W., Chung, J. K., ... & Kim, C. S. (2001). Cross-modal plasticity and cochlear implants. Nature, 409(6817), 149-150.

- Lomber, S. G., Meredith, M. A., & Kral, A. (2010). Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nature Neuroscience, 13(11), 1421-1427.

- McGurk, H., & MacDonald, J. (1976). Hearing lips and seeing voices. Nature, 264(5588), 746-748.

- Paul, B. T., Bajin, M. D., Uzelac, M., Chen, J., Le, T., Lin, V., & Dimitrijevic, A. (2022). Evidence of visual crossmodal reorganization positively relates to speech outcomes in cochlear implant users. Scientific Reports, 12(1), 17749.

- Pepper, J. L., & Nuttall, H. E. (2023). Age-related changes to multisensory integration and audio-visual speech perception. Brain Sciences, 13(8), 1126.

- Puschmann, S., Sandmann, P., Bendixen, A., & Thiel, C. M. (2014). Age-related hearing loss increases cross-modal distractibility. Hearing Research, 316, 28-36.

- Puschmann, S., Daeglau, M., Stropahl, M., Mirkovic, B., Rosemann, S., Thiel, C. M., & Debener, S. (2019). Hearing-impaired listeners show increased audio-visual benefit when listening to speech in noise. NeuroImage, 196, 261-268.

- Rosemann, S., & Thiel, C. M. (2018). Audio-visual speech processing in age-related hearing loss: Stronger integration and increased frontal lobe recruitment. NeuroImage, 175, 425-437.

- Schmitt, R., Meyer, M., & Giroud, N. (2023). Improvements in naturalistic speech-in-noise comprehension in middle-aged and older adults after 3 weeks of computer-based speechreading training. npj Science of Learning, 8(1), 32.

- Schormans, A. L., Typlt, M., & Allman, B. L. (2017). Crossmodal plasticity in auditory, visual and multisensory cortical areas following noise-induced hearing loss in adulthood. Hearing Research, 343, 92-107.

- Shams, L., Kamitani, Y., & Shimojo, S. (2000). What you see is what you hear. Nature, 408(6814), 788.

- Stropahl, M., & Debener, S. (2017). Auditory cross-modal reorganization in cochlear implant users indicates audio-visual integration. NeuroImage: Clinical, 16, 514-523.

- Tye-Murray, N., Sommers, M. S., & Spehar, B. (2007). Audio-visual integration and lipreading abilities of older adults with normal and impaired hearing. Ear and Hearing, 28(5), 656-668.

- Van Engen, K. J., Xie, Z., & Chandrasekaran, B. (2017). Audio-visual sentence recognition not predicted by susceptibility to the McGurk effect. Attention, Perception, & Psychophysics, 79, 396-403.