The Benefits of Linear Frequency Lowering for Music

Successful approaches for preserving harmonics in music programs can retain the same overall sound of music for people who are hard of hearing.

While frequency lowering has been useful for making higher frequency consonants audible for people with significant high frequency hearing loss, it does not necessarily follow that it would also be useful for music.(1) For speech, lowering frequencies to a healthier part of the cochlea can be very useful. This works because the high frequency energy in speech that gets lowered consists of broad, noise-like bands rather than harmonics. These broad bands of high frequency energy are found in obstruents such as /s/ and /f/, and it doesn’t really matter whether the broad band of energy of a sibilant begins at 4000 Hz or 3800 Hz.

Of course, with too much frequency lowering there can be some consonant confusion, such as /s/ being confused with /sh/. In the lower frequencies, speech is dominated by sonorant sounds such as vowels and nasals. Sonorants are made up of equally spaced harmonics rather than broad bands of noise. Changing any of these harmonics will cause speech to sound “rough” and odd. Therefore, frequency lowering would not work for the lower frequencies of speech.

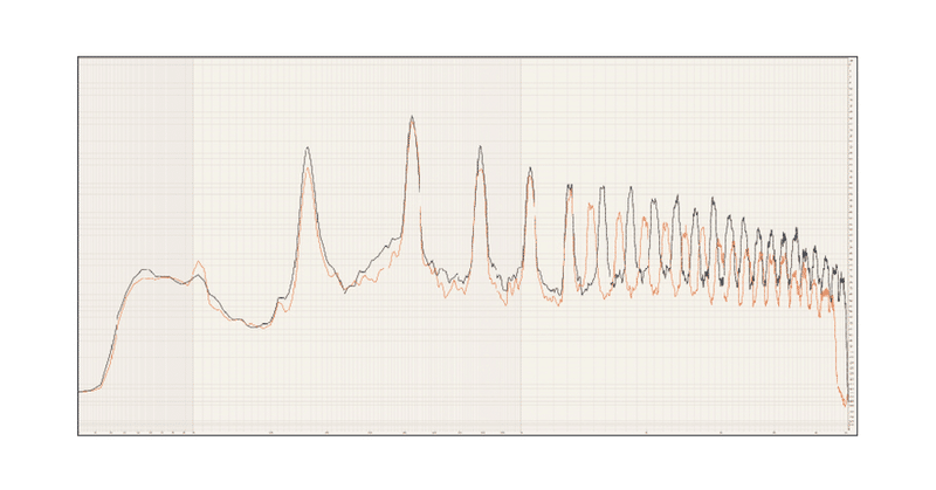

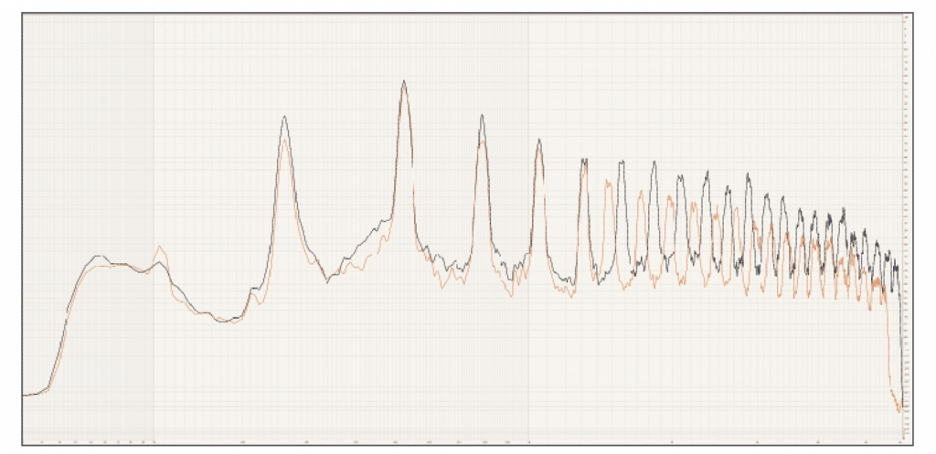

However, instrumental music is made up of harmonics only. This is true of the lower frequencies (like speech) and also of the higher frequencies (unlike speech). Even the smallest lowering of a harmonic in music can cause dissonance.(2) Figure 1 shows a spectrum where all harmonics above 1500 Hz are lowered by only half a semitone. The original sound of this violin is shown in red and the frequency lowered harmonics are in black. QR codes for three audio files are also included: the first of a violin playing a single note (A [440 Hz]), the second of full orchestral music, and the third of speech. All are presented in an A-B-A format, with A being the original (red) and B being the slight high frequency lowering (black). Note that the third file, with speech, still sounds fine.
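The arithmetic behind Figure 1 can be checked with a short Python sketch. It assumes a violin note at A4 (440 Hz) with its first eight harmonics, lowers every harmonic above 1500 Hz by half a semitone (a frequency ratio of 2^(-1/24)), and reports how far each output component lands from the nearest true harmonic of 440 Hz. The function names are illustrative only.

```python
import math

F0_HZ = 440.0                   # violin note A4, as in the audio example
CUTOFF_HZ = 1500.0              # harmonics above this are lowered (Figure 1)
HALF_SEMITONE = 2 ** (-1 / 24)  # frequency ratio of a quarter-tone drop


def lower_harmonics(f0, n_harmonics, cutoff, ratio):
    """Return (original_hz, output_hz) pairs; harmonics at or below cutoff pass unchanged."""
    pairs = []
    for n in range(1, n_harmonics + 1):
        f = n * f0
        pairs.append((f, f * ratio if f > cutoff else f))
    return pairs


def detuning_cents(f, f0):
    """Signed distance in cents (100 cents = 1 semitone) from f to the nearest multiple of f0."""
    nearest = max(1, round(f / f0)) * f0
    return 1200 * math.log2(f / nearest)


for original, lowered in lower_harmonics(F0_HZ, 8, CUTOFF_HZ, HALF_SEMITONE):
    print(f"{original:6.0f} Hz -> {lowered:7.1f} Hz "
          f"({detuning_cents(lowered, F0_HZ):+5.1f} cents from nearest harmonic)")
```

Each lowered harmonic ends up 50 cents (a quarter tone) flat of the harmonic it replaced, while the harmonics at or below 1500 Hz stay where they were, so the upper and lower parts of the same note no longer agree in pitch.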

Addressing Cochlear Dead Zones

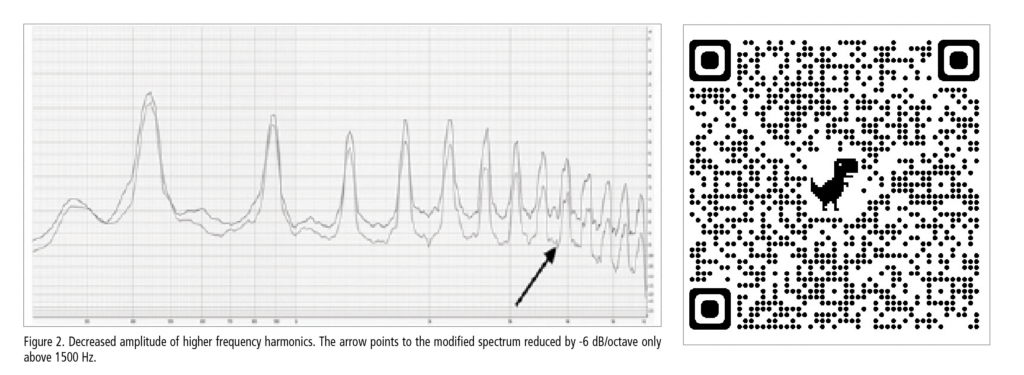

Frequency lowering for music, at least initially, does not appear to be a valid clinical strategy in a music program for a patient whose cochlear dead regions need to be avoided. One clinical strategy, however, has been to decrease the amplitude of the higher frequency harmonics. In this scenario, the exact frequencies of the harmonics are not changed; only their amplitudes are. The spectra are shown in Figure 2, with the arrow pointing to the modified spectrum, which has been reduced by 6 dB/octave only above 1500 Hz. The associated audio file is also in an A-B-A format. (Scan the QR code next to Figure 2 to hear it.)
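The amplitude-only strategy of Figure 2 can be sketched the same way. This minimal illustration assumes the 6 dB/octave reduction is a straight line on a log-frequency axis starting at 1500 Hz, applied to a hypothetical flat 70 dB harmonic spectrum of A4; the point is that the frequency of every harmonic is left untouched.

```python
import math

KNEE_HZ = 1500.0             # reduction starts above this frequency
SLOPE_DB_PER_OCTAVE = -6.0   # 6 dB less per octave above the knee


def high_frequency_tilt_db(freq_hz, knee_hz=KNEE_HZ, slope=SLOPE_DB_PER_OCTAVE):
    """Level change in dB: 0 dB at or below the knee, `slope` dB per octave above it."""
    if freq_hz <= knee_hz:
        return 0.0
    return slope * math.log2(freq_hz / knee_hz)


def apply_tilt(harmonics):
    """harmonics: list of (freq_hz, level_db). Only the levels change, never the frequencies."""
    return [(f, level + high_frequency_tilt_db(f)) for f, level in harmonics]


violin_a4 = [(n * 440.0, 70.0) for n in range(1, 11)]  # hypothetical flat spectrum
for (f, before), (_, after) in zip(violin_a4, apply_tilt(violin_a4)):
    print(f"{f:6.0f} Hz: {before:5.1f} dB -> {after:5.1f} dB")
```

At 3000 Hz (one octave above 1500 Hz) the level drops by 6 dB and at 6000 Hz by 12 dB, but the 440 Hz harmonic spacing is unchanged.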

As pointed out by Francis Kuk (in The Widex Approach, below), it has been found that some sound energy in the “cochlear dead region” may still be useful. It appears that the deleterious effects of amplified music in a frequency region with significant cochlear damage are level dependent, with lower levels being more acceptable. However, there is another strategy that may allow our patients to listen to and/or play music with good fidelity, despite having significant cochlear damage.

Frequency lowering can be linear or non-linear in its function. By “linear” we mean that the higher frequency harmonics (above a certain point) are all lowered by the same musical interval, and they are lowered in such a way that they are mapped onto, or in harmony with, already existing lower frequency harmonics. When one does the math, this can be accomplished if the linear frequency lowering is exactly one octave: 4000 Hz is mapped onto 2000 Hz, 8000 Hz is mapped onto 4000 Hz, and so on.
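A short Python sketch makes the octave arithmetic concrete. It assumes an A4 note (440 Hz), a hypothetical start frequency of 1500 Hz, and ten harmonics, halves every harmonic above the start frequency, and reports where each lowered component lands relative to the original harmonic series.

```python
F0_HZ = 440.0       # A4, as in Figure 3
START_HZ = 1500.0   # hypothetical start frequency for the lowering


def octave_lowered(f0, n_harmonics, start_hz):
    """Return (n, lowered_hz) for each harmonic n * f0 above start_hz, halved in frequency."""
    return [(n, n * f0 / 2) for n in range(1, n_harmonics + 1) if n * f0 > start_hz]


def position(f, f0):
    """Position of f in units of f0; a whole number means it sits on an existing harmonic."""
    return f / f0


for n, f in octave_lowered(F0_HZ, 10, START_HZ):
    ratio = position(f, F0_HZ)
    where = "existing harmonic" if ratio.is_integer() else "midway between harmonics"
    print(f"harmonic {n:2d} ({n * F0_HZ:6.0f} Hz) -> {f:6.0f} Hz = {ratio:.1f} x f0, {where}")
```

The even-numbered harmonics (1760, 2640, 3520 and 4400 Hz) land exactly on existing harmonics (880, 1320, 1760 and 2200 Hz); the odd-numbered ones land midway between two harmonics, which is where the new, harmonically related notes come from.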

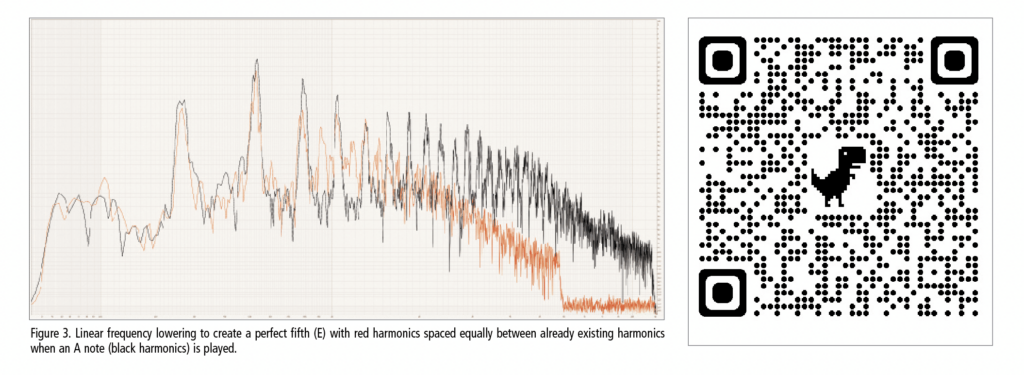

When the lowering is exactly one octave, the even-numbered harmonics are all mapped onto already existing harmonics, and the odd-numbered harmonics are mapped onto frequencies that will not sound dissonant. For half-wavelength resonator musical instruments such as the guitar, piano, and violin, a perfect fifth is created (for example, a C creates not only the C one octave lower, but also an intervening G). The perfect fifth was not what the composer had in mind, but it still sounds harmonically pleasing. For quarter-wavelength resonator musical instruments such as the clarinet and trumpet, thirds are created which, again, were not what the composer had in mind, but still sound harmonically pleasing. Figure 3 shows a spectrum of such a linear frequency lowering in which a perfect fifth (E) is created (red harmonics spaced midway between already existing harmonics) when an A note (black harmonics) is played.
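The intervals created by the odd-numbered harmonics can be computed directly. In this sketch (an illustration, not part of any hearing aid), harmonic n of a 440 Hz A lands at n/2 × 440 Hz after one-octave lowering; folding that ratio into a single octave and converting it to cents identifies the musical interval. The third harmonic gives the 3:2 perfect fifth (E, about 702 cents) and the fifth harmonic gives the 5:4 major third (C♯, about 386 cents).

```python
import math

F0_HZ = 440.0  # A4, as in Figure 3


def fold_into_octave(ratio):
    """Fold a frequency ratio into the range [1, 2) by whole octaves."""
    while ratio >= 2.0:
        ratio /= 2.0
    while ratio < 1.0:
        ratio *= 2.0
    return ratio


def lowered_interval_cents(n):
    """Interval in cents above the A at or below it, for harmonic n lowered one octave."""
    return 1200 * math.log2(fold_into_octave(n / 2))


for n in (3, 5):
    print(f"harmonic {n} ({n * F0_HZ:.0f} Hz) -> {n * F0_HZ / 2:.0f} Hz, "
          f"{lowered_interval_cents(n):.0f} cents above the A below it")
```

For comparison, the equal-tempered fifth and major third are 700 and 400 cents, so both new components fall close to familiar consonant intervals.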

Hearing aids that use a non-linear frequency lowering scheme would therefore not be suitable for any form of music, only for speech.

Frequency Lowering Approaches

There are three major frequency lowering approaches currently used in the hearing aid industry:

- FREQUENCY TRANSPOSITION: a linear decrease which can be viewed as a “cut/copy and paste” approach. An example is the Widex Audibility Extender.

- FREQUENCY TRANSLATION: a linear spectral envelope warping which can be viewed as a “copy and paste” approach. An example is the Starkey Spectral iQ.

- SOUND RECOVERY: a non-linear frequency compression approach.
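To see why a non-linear scheme disturbs harmonicity, the sketch below uses one common textbook description of non-linear frequency compression: frequencies below a cutoff pass unchanged, and above it they are compressed on a logarithmic frequency scale by a fixed ratio. The cutoff (1500 Hz) and ratio (2:1) are hypothetical values, and this is not the exact algorithm of any particular product.

```python
F0_HZ = 440.0
CUTOFF_HZ = 1500.0  # hypothetical compression cutoff
RATIO = 2.0         # hypothetical compression ratio


def compress_frequency(f, cutoff_hz=CUTOFF_HZ, ratio=RATIO):
    """Generic non-linear frequency compression: unchanged below the cutoff,
    compressed on a log-frequency scale above it."""
    if f <= cutoff_hz:
        return f
    return cutoff_hz * (f / cutoff_hz) ** (1.0 / ratio)


def off_grid_fraction(f, f0):
    """0.0 when f is an exact harmonic of f0; 0.5 when it lies midway between two."""
    x = f / f0
    return abs(x - round(x))


harmonics = [n * F0_HZ for n in range(1, 11)]
compressed = [compress_frequency(f) for f in harmonics]
for f_in, f_out in zip(harmonics, compressed):
    print(f"{f_in:6.0f} Hz -> {f_out:7.1f} Hz, "
          f"off-grid by {off_grid_fraction(f_out, F0_HZ):.2f} x f0")
```

Above the cutoff the compressed components are neither multiples of 440 Hz nor equally spaced (their spacing shrinks from about 192 Hz to about 132 Hz), which is the loss of harmonic structure that makes such schemes a poor fit for music.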

Despite using differing linear algorithms, frequency transposition by Widex (a cut/copy and paste approach) and frequency translation by Starkey (a copy and paste approach) can both be very useful in a music program for our hard of hearing patients if set to lower the frequency by exactly one octave. With so many algorithms being developed in the hearing aid industry, it may be insightful to step back and learn more about how such design decisions are made.

The following are two overviews of the usefulness and development of these two linear frequency lowering approaches. Dave Fabry, PhD, discusses the Starkey Spectral iQ linear frequency translation, and Francis Kuk, PhD, discusses the Widex Audibility Extender linear frequency transposition.

The Starkey Approach

Starkey introduced its frequency-lowering strategy, originally called Spectral iQ, with X and Wi Series hearing aids in 2011.

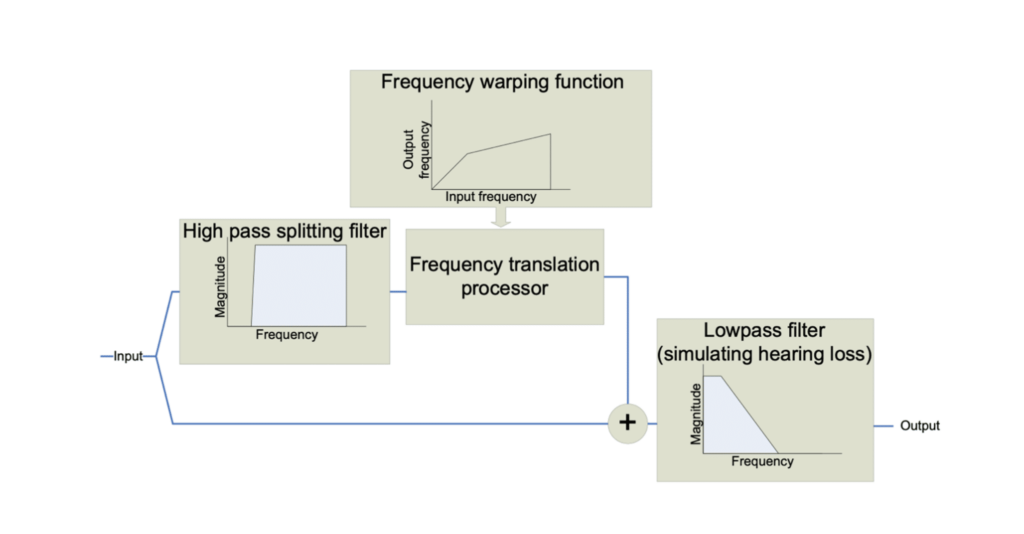

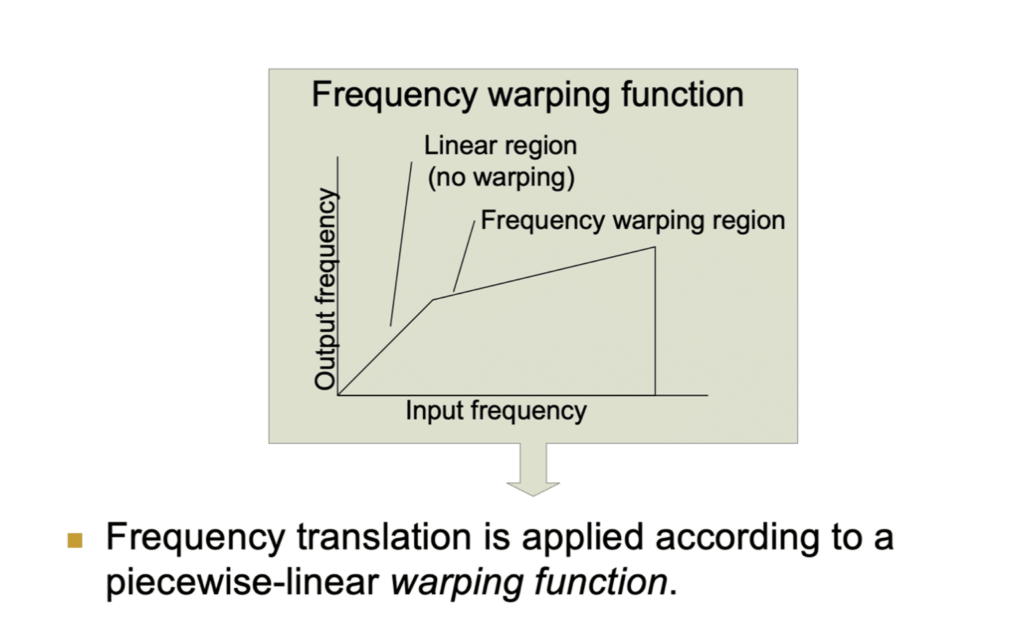

The Spectral iQ algorithm is intended for use in cases of severe-to-profound, high-frequency, sloping hearing losses. This novel technique for frequency lowering is technically described as Spectral Envelope Warping (SEW).(3)

The SEW algorithm operates by monitoring high-frequency regions for spectral peaks that are responsible for the recognition of high-frequency speech sounds like /s/ and /sh/. The algorithm characterizes the high-frequency spectral shape and dynamically recreates that spectral shape inside a lower frequency target region. The source region for analysis, target region for the newly created spectral cue, and gain applied to the newly generated cue are each prescribed by the programming software.
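The three prescribed parameters can be illustrated with a toy sketch that re-draws the shape of the source-band spectral envelope inside the target band and applies the cue gain. This is not Starkey's SEW implementation: the `LoweringPrescription` fields, the linear-in-Hz mapping from source band to target band, and the /s/-like envelope values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LoweringPrescription:
    """Hypothetical stand-in for the settings the fitting software prescribes."""
    source_lo_hz: float
    source_hi_hz: float
    target_lo_hz: float
    target_hi_hz: float
    cue_gain_db: float


def warp_envelope(envelope, rx):
    """Re-draw the source-band envelope shape inside the target band.

    envelope: list of (freq_hz, level_db) samples of the spectral envelope.
    Returns (freq_hz, level_db) points describing the new, lower-frequency cue.
    """
    scale = (rx.target_hi_hz - rx.target_lo_hz) / (rx.source_hi_hz - rx.source_lo_hz)
    cue = []
    for f, level in envelope:
        if rx.source_lo_hz <= f <= rx.source_hi_hz:
            new_f = rx.target_lo_hz + (f - rx.source_lo_hz) * scale
            cue.append((new_f, level + rx.cue_gain_db))
    return cue


def peak(points):
    """The (freq_hz, level_db) point with the highest level."""
    return max(points, key=lambda p: p[1])


# Hypothetical /s/-like envelope with a broad peak near 6 kHz.
S_LIKE_ENVELOPE = [(1000.0, 30.0), (2000.0, 35.0), (4000.0, 45.0), (5000.0, 55.0),
                   (6000.0, 62.0), (7000.0, 50.0), (8000.0, 40.0)]
RX = LoweringPrescription(4000.0, 8000.0, 2000.0, 3000.0, cue_gain_db=-10.0)

print("cue points:", warp_envelope(S_LIKE_ENVELOPE, RX))
print("cue peak:", peak(warp_envelope(S_LIKE_ENVELOPE, RX)))
```

With a 4000 to 8000 Hz source band and a 2000 to 3000 Hz target band, the 6000 Hz envelope peak reappears as a cue peak at 2500 Hz, 10 dB lower because of the hypothetical -10 dB cue gain.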

As opposed to nonlinear frequency compression approaches, which may affect harmonicity, the SEW algorithm uses linear prediction to design the resonant filter such that it does NOT affect harmonicity. This is especially important for music, as the components being lowered maintain their harmonic structure without introducing artifacts or distortion.
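Linear prediction itself is a standard technique, and a generic version shows why it can be harmonicity-friendly: it fits an all-pole (resonant) filter to a signal's spectral envelope, and any such linear, time-invariant filter can only change the amplitude and phase of each harmonic, never its frequency. The sketch below estimates a second-order predictor with the textbook autocorrelation method and Levinson-Durbin recursion, then reads the resonance frequency from the filter's poles. The synthetic 3 kHz test resonance, the 16 kHz sample rate, and the model order are assumptions for illustration; this is not Starkey's implementation.

```python
import math
import random


def autocorrelation(x, max_lag):
    """Biased autocorrelation estimates r[0..max_lag]."""
    n = len(x)
    return [sum(x[i] * x[i + k] for i in range(n - k)) / n for k in range(max_lag + 1)]


def levinson_durbin(r, order):
    """Solve for predictor coefficients a[1..order] with x[n] ~ sum_k a[k] * x[n - k]."""
    a = [0.0] * (order + 1)
    err = r[0]
    for i in range(1, order + 1):
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        updated = a[:]
        updated[i] = k
        for j in range(1, i):
            updated[j] = a[j] - k * a[i - j]
        a = updated
        err *= 1.0 - k * k
    return a[1:], err


def resonance_hz(a1, a2, fs):
    """Centre frequency of the complex pole pair of 1 / (1 - a1 z^-1 - a2 z^-2)."""
    disc = a1 * a1 + 4.0 * a2
    if disc >= 0:
        raise ValueError("poles are real: no resonant peak")
    return math.atan2(math.sqrt(-disc) / 2.0, a1 / 2.0) * fs / (2.0 * math.pi)


# Synthetic test signal: white noise through a known resonance at 3 kHz.
FS = 16000.0
TRUE_FC, RADIUS = 3000.0, 0.95
a1_true = 2 * RADIUS * math.cos(2 * math.pi * TRUE_FC / FS)
a2_true = -RADIUS ** 2
rng = random.Random(0)
x = [0.0, 0.0]
for _ in range(20500):
    x.append(a1_true * x[-1] + a2_true * x[-2] + rng.gauss(0.0, 1.0))
x = x[500:]  # drop the start-up transient

(a1_est, a2_est), _ = levinson_durbin(autocorrelation(x, 2), 2)
print(f"estimated resonance: {resonance_hz(a1_est, a2_est, FS):.0f} Hz")
```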

This signal processing strategy identifies high-frequency spectral cues and generates a complementary cue at a lower, audible frequency in an effort to improve audibility of high-frequency sounds that were previously inaudible to the hearing aid user. Our expectation was that generating a new cue, rather than lowering the sound and its adjacent noise, would minimize the negatively perceived changes in sound quality that are inherent in introducing new information at frequencies that did not previously contain that information.

The Widex Approach

Widex first introduced linear frequency transposition in 2006(4) as the Audibility Extender (AE). The algorithm “cuts” the output spectrum above a cutoff frequency (start frequency) and “pastes” it down one octave so the transposed higher frequency harmonics line up with the amplified, lower frequency harmonics. The original AE was conceived by Henning Andersen, then chief engineer and an audiophile who places exceptional importance on preserving the natural quality of all sounds. Along with Carl Ludvigsen, Widex chief audiologist at the time, Henning made preservation of sound naturalness a cornerstone of all algorithmic development at Widex, as it remains to this day.

The AE was upgraded to its present form in 2017(5) in the Widex BEYOND hearing aid. It may be viewed as a “cut what you need and paste” approach in that the transposed octave (of sound) is pasted onto the amplified output spectrum, which extends beyond the start frequency by an individualized amount. The enhanced AE is based on the Effortless Hearing design rationale. Its new features reflect how cognitive factors are considered in the enhancement process, so that people of all cognitive backgrounds may receive the maximum benefit from its use. For those who want to delve into this further, the Hearing Review article “Audibility extender: using cognitive models for the design of a frequency lowering hearing aid” by Kuk et al(5) provides more details on the design rationale and features.

Briefly, current cognitive models suggest that listeners find it more effortful to adapt to the new transposed sounds the more dissimilar those sounds are from the original, untransposed sounds. This suggests that, depending on the listener, it may be more acceptable to start transposition with a fuller bandwidth (less difference from the original sounds) and gradually reduce the bandwidth (more difference from the original sounds) to ease the transition.

Recent research has also cast doubt on how much of the “dead” high-frequency region should be amplified. Previously, it was believed that all frequencies within a dead region (or above the start frequency) should be transposed and not amplified. In an internal study, we discovered that listeners with unaidable high-frequency loss actually preferred an output bandwidth that extends into the “dead” region. The amount of desirable extension varied among listeners, ranging from no extension to a full bandwidth. The majority of listeners, however, preferred extending the bandwidth by less than one octave, with more extension preferred for music listening than for speech understanding.

These new findings suggest that the bandwidth of the amplified spectrum to which the transposed spectrum is added may be critical to the listener’s success with linear transposition. Thus, the current AE has a variable Output Frequency Range (OFR), which allows the clinician to specify the amplification bandwidth in the AE mode from as low as the start frequency to a full bandwidth of 10 kHz in one-third octave intervals. This allows one to individualize the optimal bandwidth based on the listener’s preference, experience with AE, or extent of “dead” region.
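A simplified model of the adjustable output bandwidth can help in reasoning about these settings. The sketch assumes, following the description above, that the source octave starting at the start frequency is halved in frequency and pasted down one octave, while the amplified path is kept up to the chosen Output Frequency Range; OFR choices step up from the start frequency in one-third octave intervals to a 10 kHz full bandwidth. The function names, the 2000 Hz start frequency, and the rounding of OFR values are hypothetical, and AE gain is not modeled.

```python
START_HZ = 2000.0         # hypothetical start frequency
FULL_BANDWIDTH_HZ = 10000


def ofr_settings(start_hz, full_hz=FULL_BANDWIDTH_HZ):
    """OFR choices: from start_hz upward in 1/3-octave steps, ending at full bandwidth."""
    settings = []
    k = 0
    while start_hz * 2 ** (k / 3) < full_hz:
        settings.append(round(start_hz * 2 ** (k / 3)))
        k += 1
    settings.append(full_hz)
    return settings


def audibility_extender_sketch(components, start_hz, ofr_hz):
    """components: list of (freq_hz, level_db).

    amplified:  components kept in the normal amplified path, up to ofr_hz
    transposed: the source octave [start_hz, 2 * start_hz) pasted one octave down
    """
    amplified = [(f, level) for f, level in components if f <= ofr_hz]
    transposed = [(f / 2, level) for f, level in components
                  if start_hz <= f < 2 * start_hz]
    return amplified, transposed


a4_note = [(n * 440.0, 60.0) for n in range(1, 11)]  # hypothetical flat spectrum
print("OFR options (Hz):", ofr_settings(START_HZ))
amplified, transposed = audibility_extender_sketch(a4_note, START_HZ, ofr_hz=2520)
print("amplified:", [f for f, _ in amplified])
print("transposed:", [f for f, _ in transposed])
```

With an OFR of 2520 Hz in this model, the transposed components at 1320 and 1760 Hz coincide with amplified harmonics, while 1100, 1540 and 1980 Hz fall midway between them.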

To further reduce the risk of misaligned harmonics and to preserve the naturalness of the transposed sounds, the current AE includes a Harmonic Tracker. This automatic algorithm tracks the harmonics of the transposed sounds to make sure that they are aligned with those of the original sounds, minimizing echoic artifacts.
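Why alignment needs active tracking can be shown with a hypothetical case. If a transposition were implemented as a constant downward shift in Hz (an assumption made only for this illustration, not a description of the Widex algorithm), the shifted harmonics of a voice with fundamental f0 would land back on that voice's harmonic series only when the shift is a whole multiple of f0. The sketch rounds a nominal shift to the nearest multiple of an estimated f0 and measures the remaining misalignment; the names and the 220 Hz fundamental are illustrative.

```python
def aligned_shift_hz(nominal_shift_hz, f0_hz):
    """Round a downward shift to the nearest whole multiple of f0 (at least one f0)."""
    return max(1, round(nominal_shift_hz / f0_hz)) * f0_hz


def misalignment_hz(components_hz, shift_hz, f0_hz):
    """Largest distance (Hz) from any shifted component to the nearest harmonic of f0."""
    worst = 0.0
    for f in components_hz:
        position = (f - shift_hz) / f0_hz
        worst = max(worst, abs(position - round(position)) * f0_hz)
    return worst


F0_HZ = 220.0                               # hypothetical voice fundamental
SOURCE = [n * F0_HZ for n in range(10, 19)]  # harmonics from 2200 to 3960 Hz
NOMINAL_SHIFT_HZ = 1500.0                   # e.g. half of a 3000 Hz source peak

tracked = aligned_shift_hz(NOMINAL_SHIFT_HZ, F0_HZ)
print(f"nominal shift {NOMINAL_SHIFT_HZ:.0f} Hz: "
      f"off by {misalignment_hz(SOURCE, NOMINAL_SHIFT_HZ, F0_HZ):.1f} Hz")
print(f"tracked shift {tracked:.0f} Hz: "
      f"off by {misalignment_hz(SOURCE, tracked, F0_HZ):.1f} Hz")
```

A nominal 1500 Hz shift leaves every shifted harmonic of a 220 Hz voice 40 Hz off the harmonic grid; rounding the shift to 1540 Hz (7 × 220 Hz) removes the misalignment.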

The AE also uses a Speech Detector to distinguish between voiced sounds (energy in all channels) and unvoiced sounds (energy only above 2000 Hz). When a voiced speech sound is detected, it is transposed at a lower AE gain than when an unvoiced sound is detected, which is transposed at maximum AE gain. This minimizes any masking by the transposed voiced sound in the target region and adds to the salience of the unvoiced transposed sounds. In general, the transposed sounds are amplified so that they are approximately 5 to 10 dB above the listener’s hearing threshold at that frequency.
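The gain logic described above can be expressed as a small decision rule. In this sketch, the 5 to 10 dB target above threshold comes from the text, but placing unvoiced sounds at the top of that range, reducing voiced sounds by a hypothetical 5 dB (never below the 5 dB floor), and the function names are assumptions; the actual Speech Detector and its gain values are not specified here.

```python
MIN_ABOVE_THRESHOLD_DB = 5.0   # lower end of the 5-10 dB range quoted in the text
MAX_ABOVE_THRESHOLD_DB = 10.0  # upper end, used here for unvoiced sounds (assumption)
VOICED_REDUCTION_DB = 5.0      # hypothetical; the text only says voiced gain is lower


def transposed_target_db(threshold_db, voiced):
    """Target level of a transposed sound, in the same dB units as threshold_db."""
    above = MAX_ABOVE_THRESHOLD_DB - (VOICED_REDUCTION_DB if voiced else 0.0)
    return threshold_db + max(MIN_ABOVE_THRESHOLD_DB, above)


def ae_gain_db(input_level_db, threshold_db, voiced):
    """Gain that brings a transposed input of input_level_db to its target level."""
    return transposed_target_db(threshold_db, voiced) - input_level_db


for voiced in (False, True):
    kind = "voiced" if voiced else "unvoiced"
    print(f"{kind:8s}: target {transposed_target_db(60.0, voiced):.0f} dB, "
          f"gain {ae_gain_db(40.0, 60.0, voiced):.0f} dB")
```

For a threshold of 60 dB, an unvoiced transposed sound is targeted at 70 dB and a voiced one at 65 dB, both in the same dB units as the threshold.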

To gradually ease listeners into accepting the transposed sounds, the AE has an AE Gain Acclimatization feature. Once it is activated, the target AE gain is reduced by 6 dB at the time of fitting and then automatically increases in ½ dB steps per day, so that the full target AE gain is restored 12 days after fitting. This minimizes the initial mismatch and makes it easier (less effortful) to accept the AE sounds. Training on the effective use of the feature is also emphasized.
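The acclimatization arithmetic (6 dB divided by 0.5 dB per day equals 12 days) can be written as a schedule. This sketch assumes one 0.5 dB step per whole day since fitting; the function name is hypothetical.

```python
INITIAL_REDUCTION_DB = 6.0  # AE gain starts 6 dB below target at fitting
STEP_DB_PER_DAY = 0.5       # automatic daily increase


def ae_gain_offset_db(days_since_fitting: int) -> float:
    """Offset from the target AE gain: -6 dB at fitting, +0.5 dB per day, capped at 0 dB."""
    if days_since_fitting < 0:
        raise ValueError("days_since_fitting must be >= 0")
    return min(0.0, -INITIAL_REDUCTION_DB + STEP_DB_PER_DAY * days_since_fitting)


days_to_full_gain = INITIAL_REDUCTION_DB / STEP_DB_PER_DAY
print(f"full target AE gain after {days_to_full_gain:.0f} days")
for day in (0, 3, 6, 9, 12, 15):
    print(f"day {day:2d}: {ae_gain_offset_db(day):+.1f} dB relative to target")
```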

In summary, Widex’s approach of using linear frequency transposition and the features in the Widex Audibility Extender, namely the Harmonic Tracker, Speech Detector, flexible Output Frequency Range and Start Frequency, and the Audibility Extender’s acclimatization algorithm, improve the likelihood of initial match between the transposed sounds and the phonological representations in the listener’s long-term memory. This minimizes the need for explicit processing and could benefit people of all cognitive backgrounds.

Original citation for this article: Chasin M, Fabry D, Kuk F. The Benefits of Linear Frequency Lowering for Music. Hearing Review. 2024;31(1):08-13.

References

- Chasin M. Frequency Compression Is for Speech, Not Music. Hearing Review. 2016;23(6):12.

- Chasin M. Music and Hearing Aids. San Diego, Calif: Plural Publishing Group; 2022.

- Fitz K, Edwards B, Baskent D. Frequency translation by high-frequency spectral envelope warping in hearing assistance devices. US Patent US8761422B2; 2014.

- Kuk F, Korhonen P, Peeters H, Keenan D, Jessen A, Andersen H. Linear frequency transposition: Extending the audibility of high frequency information. Hearing Review. 2006;13(10):42-48.

- Kuk F, Seper E, Korhonen P. Audibility extender: using cognitive models for the design of a frequency lowering hearing aid. Hearing Review. 2017;24(10):26-34.