Brian C. J. Moore’s Great Influence on Hearing Aid Development

Like many students of audiology (and I remain one), I first became aware of Brian Moore’s work in his 1989 textbook Introduction to the Psychology of Hearing (3rd Edition)[1], a book that he wrote because he felt that no other adequate book existed at that time for a course that he was teaching. The earlier and subsequent editions of this book have been the cornerstone for many students of audiology and perception. During my work over the last 20 years bringing hearing aids to market, I have had many further encounters with the work of Brian Moore. He has rigorously examined many areas in the field of audition and so his work has had and continues to have a great influence on many design decisions and concepts that are used throughout the hearing aid industry.

[1] The most current version of this book is the 6th edition (Moore, 2012a).

To document all of his influence would take many review articles, given his prolific output. His biography on the University of Cambridge website mentions that he has written or edited 19 books and over 700 scientific papers and book chapters (University of Cambridge, 2019). His list of collaborators is wide and varied across geography and subject matter and could, in my opinion, be considered a “who’s who” of researchers in audition. So, in this short article, I would like to briefly highlight three areas of Moore’s research that I find especially interesting and influential in the development of hearing aid technology. My selection is probably very different from that of my colleagues across the industry, and so this article reflects my personal observations of his work. The three areas are hearing aid processing delay, amplitude compression systems, and frequency lowering technology.

Processing Delay

Currently, digital hearing aids have tremendous processing capabilities for acoustic signals regardless of type and origin. This processing includes (but is not limited to) the conversion from the analog to the digital domain, noise reduction, directional microphone patterns in many frequency bands, compression (amplification), feedback management, frequency lowering, and then the conversion back from the digital domain to the analog domain. While complex signal processing can be applied rapidly by the hearing aid, each step in the signal processing chain takes time. Added together, these processing times can create a total hearing aid delay of more than 10 milliseconds (ms). Compared with an analog hearing aid, which potentially applies less than 1 ms of delay, this is at least an order of magnitude greater (Stone and Moore, 1999). With the wide use of open hearing aid fittings, the processed signal can also arrive later than the sound that enters the ear canal directly via the vent or the dome. This could have a negative effect on perception, especially for individuals who have relatively normal hearing in the lower frequencies. With relatively long delays, i.e. greater than 20 ms, the result could be an echo-like effect, whereas for delays of less than 10 ms, the effect would be a change in the timbre of the sound. The perceptual effects of processing delay were explored by Brian Moore and his long-term collaborator Michael Stone in a series of articles that appeared in the journal Ear and Hearing from 1999 to 2008. These articles were all numbered (I to V), and so there was always a little bit of expectation generated for the next installment. The first four articles were by Stone and Moore alone, while the fifth article included the contributions of Katrin Meisenbacher and Ralph Derleth (Stone et al., 2008).

Stone and Moore (1999) outlined the issues that arise when a digital hearing aid introduces delay. Specifically, they wanted to explore the perception of a person’s own voice. Own voice perception occurs via three paths: 1) through the air via the hearing instrument venting and any leaks (as no fitting is ever completely occluded); 2) via the bones and cartilaginous structures of the head; and 3) via the air to the hearing aid microphone, through the subsequent processing of the hearing aid, and then out via the receiver. Alterations in the level and delay of the air conduction path could potentially have three effects. The first effect could be a disruption of voice production caused by conflicts between the auditory and the proprioceptive systems when self-monitoring. The second effect could be a disruption of audiovisual communication, given that there could be a perceptible asynchrony between the auditory and the visual information channels. Finally, the third effect is the subjective disturbance that occurs when, with increasing hearing loss, the amount of sound heard via air and bone conduction decreases and the hearing aid user relies more on what is heard through the hearing instrument. Using simulated hearing losses and hearing aid processing with normal hearing listeners, Stone and Moore (1999) concluded that delays between air conducted and bone conducted sound were likely to become disturbing when the delay exceeds 20 ms. They also concluded that longer delays might be tolerable for moderate to severe hearing losses, and that these delays are smaller than the delays at which the audiovisual integration of speech is affected. These results and conclusions showed that the delay must be considerable, i.e. greater than 20 ms, in order to be disruptive. However, this was definitely not the end of the story, as we found out with the subsequent papers on this topic from Moore and his colleagues.

Stone and Moore (2002) looked at the effects of hearing aid processing (linear and compression) and delay on the production and perception of the hearing aid wearer’s own voice. The results showed that delays became disturbing when they exceeded 15 ms in an acoustically “dry” environment and more than 20 ms in an acoustically “live” environment. Speech production was not affected until the delay was greater than 30 ms. So, Stone and Moore (2002) recommended that hearing aids should be fine with up to 15 ms of delay, whereas longer delays of up to 30 ms would require that the user is warned about the potential for side effects. This limit could be increased by 4 ms in a more reverberant environment. They also pointed out that the application of compression may lead to a reduced tolerance of delay. This study gave us further insight into a common complaint amongst end users about their own voice, one that could potentially be made worse by longer delays.

Stone and Moore (2003) revisited the topic of delay by looking at the effects of frequency-dependent delay on subjective and objective measures of speech production and perception. In this paper, they used hearing-impaired subjects, with vowel-consonant-vowel (VCV) nonsense syllables as stimuli for an identification task and a prepared script for a reading task. The delays were varied across frequencies and an additional 2.5 ms was included to account for the conversion from the analog to the digital domain and back. The added delays were 0, 4, 9, 15 and 24 ms. The delay was at its maximum at a variable frequency between 700 Hz and 1400 Hz, and it was then reduced so that there was no added delay by 2200 Hz. Stone and Moore found that added delays of 9 ms and greater were more disturbing than 0 ms of added delay in the reading task. VCV identification decreased significantly when the delay was 15 ms or greater. What was interesting to me was that across-frequency delays of 9 to 15 ms created significant changes in speech identification (place and manner information) and a subjective disturbance. A delay of 9 to 15 ms that is constant across frequencies would have a smaller effect, which is consistent with Stone and Moore’s earlier work. This 2003 paper was pivotal because it used hearing-impaired subjects as opposed to normally hearing individuals and because the delays were varied across frequency. Stone and Moore (2003) raised the issue of comb-filter-like effects that occur when the delayed low-frequency signal from the hearing aid is combined with the natural signal that passes through the vent; this becomes a problem when the amplified sound and the sound entering through the vent are at similar levels. These comb filter effects can potentially disrupt judgements of the timbre of sounds. Combined with the previous work, the authors suggested that an overall delay of 8 to 10 ms was preferable to an across-frequency delay of the same magnitude. This third paper was very thought provoking and created anticipation for the next installment, which arrived two years later.
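As a rough illustration of the comb filter effect mentioned above, the following Python sketch models the sound at the eardrum as the sum of a direct (vent) path and a single delayed copy from the hearing aid. This is an idealized two-path model and not the method used by Stone and Moore (2003); the function names, the 5 ms example delay, and the relative level parameter are assumptions chosen for illustration only.

```python
import math


def comb_notch_frequencies(delay_ms, f_max_hz):
    """Frequencies (Hz) up to f_max_hz at which a direct sound and a copy
    delayed by delay_ms are exactly out of phase (hypothetical helper)."""
    tau = delay_ms / 1000.0  # delay in seconds
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2 * tau)  # odd multiples of 1 / (2 * tau)
        if f > f_max_hz:
            break
        notches.append(f)
        k += 1
    return notches


def comb_gain_db(freq_hz, delay_ms, relative_level_db=0.0):
    """Level (dB) of direct + delayed sound relative to the direct sound alone.

    relative_level_db is the level of the delayed (amplified) path relative
    to the direct path; 0 dB means both paths are equally loud.
    """
    tau = delay_ms / 1000.0
    a = 10 ** (relative_level_db / 20.0)
    phase = 2 * math.pi * freq_hz * tau
    # Squared magnitude of 1 + a * exp(-j * phase), clamped against rounding.
    power = max(0.0, 1 + a * a + 2 * a * math.cos(phase))
    return 20 * math.log10(max(math.sqrt(power), 1e-12))
```

With a 5 ms delay and equal levels in both paths, `comb_notch_frequencies(5.0, 1000.0)` places notches at about 100, 300, 500, 700 and 900 Hz. Calling `comb_gain_db(100.0, 5.0, -20.0)` shows that when the delayed path is 20 dB below the direct path the dip is less than 1 dB, which is in line with the observation that the effect matters most when the two paths are at similar levels.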

Stone and Moore (2005) looked at the effects of time delay on subjective disturbance and reading rates. The goal of this investigation was to examine the effects of delay on hearing-impaired subjects’ perception of their own voice. They used a four-channel, fast-acting compression test hearing instrument (600, 1500, and 3000 Hz crossover frequencies) with processing in real time. The instrument was designed with four listening programs with overall delays of 13, 21, 30, and 40 ms, and the subjects all had varying degrees of sensorineural hearing loss. The method of delivering sound into the subjects’ ear canals depended upon the instruments that the subjects currently used and their ear canal geometry. The 25 subjects read aloud from scripts and were trained to recognize the effects of delay. While some could argue that training the subjects to recognize delay would bias this study, the authors felt that it was important that the subjects were aware of what they were listening for, to reduce inconsistent judgements. Stone and Moore (2005) found that the disturbance increased with increasing delay. They also found that individuals with a low-frequency hearing loss that exceeded 50 dB HL were much less disturbed by the delays than subjects with a lesser degree of hearing loss. One very interesting finding for me was that disturbance ratings decreased with increasing experience over the course of an hour or so. This led the authors to suggest that some type of acclimatization feature for delay could be used in hearing aids. The subjects also reported that the delay required increasing effort, but there was no change in their rate of speaking. This carefully designed study gave the reader more insight into hearing aid users’ experiences of delay and also raised some questions about the implications of allowing the clinician to control the delay.

The final paper in the series estimated the limits of delay that would be acceptable for open canal fittings (Stone et al., 2008). The goal of open fittings, as we all know, is to reduce the negative experiences of the occlusion effect, which is a daily clinical challenge. The dilemma is that as you open up the fitting, you increase the amount of airborne sound that can naturally enter the ear canal. This airborne sound mixes with the amplified sound, which arrives with a slight delay. Given this mix of signals, there could potentially be acoustical effects such as the comb filter effect that was raised earlier. Stone et al. (2008) used both linear processing and multichannel compression (WDRC) in a series of three experiments with a simulated hearing aid system, with delays varying between 1 ms and 15 ms. The 1 ms delay was below what was then possible in hearing aids, while 15 ms was judged to be slightly above what was commercially available at that time. The signals were all presented via Sennheiser HD 580 headphones to participants who all had normal hearing[2]. The authors concluded that hearing aid delays of about 5 to 6 ms are likely to be acceptable for open fit hearing aids. However, if you read this paper in detail, you are reminded again that the issue of delay is multifaceted. It must also be remembered that these conclusions were based on normal hearing subjects. Today, when we think about the range of hearing losses that can be fit with open acoustics and the advancements that we have seen in feedback management, the Stone et al. (2008) investigation remains an important reference.

[2] Moore used the Sennheiser HD580 headphones in many of his studies. These headphones are open and very comfortable and have received many great reviews. Moore also gives practical advice about how to best set up HiFi systems in his book Introduction to the Psychology of Hearing (e.g. Moore, 1989; Moore, 2012a).

These five studies by Moore and his colleagues, taken together, have given us a lot of fundamental information about the perceptual effects of processing delay. This body of work is still discussed amongst researchers, engineers, clinicians, and developers today. The rule of thumb is still that the less delay the hearing instrument creates, the better. Some researchers have suggested that, when auditory and visual information is integrated, a delay to the auditory channel may in fact be beneficial and may be unique to the individual, i.e. the natural synchronization between auditory and visual speech information may not be ideal (Ipser et al., 2017).

Ipser et al. (2017) went on to suggest that perhaps the clinician should have control over the hearing instrument delay, to be adjusted based on an individual’s internal time keeping systems. Recently, work has started to look at bimodal fittings and the effects of different processing delays between a cochlear implant and a hearing aid (e.g. Zirn et al., 2019). So, the issue of delay in hearing aids continues to be an area that is being investigated and Moore’s work is a basis for further research. I am quite sure that hearing aid processing delay will continue to be a topic in the future and I look forward to hearing more about it.

Amplitude Compression Systems

Most hearing aids currently on the market have some sort of amplitude compression, to compensate for sensorineural hearing loss (Launer et al., 2016). Compression needs to be applied so that soft sounds are audible and louder sounds are still perceived as loud but are judged to be comfortable. Moore et al. (1996) concluded that loudness recruitment results from the loss of fast-acting compressive nonlinearity that operates in the normal peripheral auditory system. Wide Dynamic Range Compression (WDRC) is used throughout the hearing aid industry in order to compensate for the disruptions in perception that are a result of the hearing impairment. There are many different applications of WDRC from the different hearing instrument manufacturers and each manufacturer spends a lot of effort to design, test, and market their own implementation. This is still an area of continued research and innovation, to try to answer the question of how can compression be applied more effectively for the individual and in what way. There are many parameters of compression that can be changed within an amplification system designed to address the impaired auditory system. To this day, there is no consensus with regards to the specifics of how compression should be used. However, there are some guiding principles that Moore and his colleagues have investigated.
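To make the idea of "soft sounds audible, loud sounds comfortable" concrete, here is a minimal Python sketch of a static input/output rule for a single compression channel. It is a generic textbook-style illustration and not any manufacturer's or Moore's implementation; the function names and the default gain, compression threshold, and compression ratio are hypothetical values chosen only for the example.

```python
def wdrc_output_level(input_db, gain_db=30.0, threshold_db=45.0, ratio=2.0):
    """Static input/output rule for one channel (all defaults are assumptions).

    Below the compression threshold the gain is linear; above it, every
    `ratio` dB of extra input produces only 1 dB of extra output.
    """
    if input_db <= threshold_db:
        return input_db + gain_db
    return threshold_db + gain_db + (input_db - threshold_db) / ratio


def wdrc_gain(input_db, **kwargs):
    """Gain (dB) applied at a given input level under the same rule."""
    return wdrc_output_level(input_db, **kwargs) - input_db
```

With these assumed settings, a soft 40 dB input receives the full 30 dB of gain, while a loud 85 dB input receives only 10 dB, so the wide range of input levels is squeezed into a narrower range of output levels.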

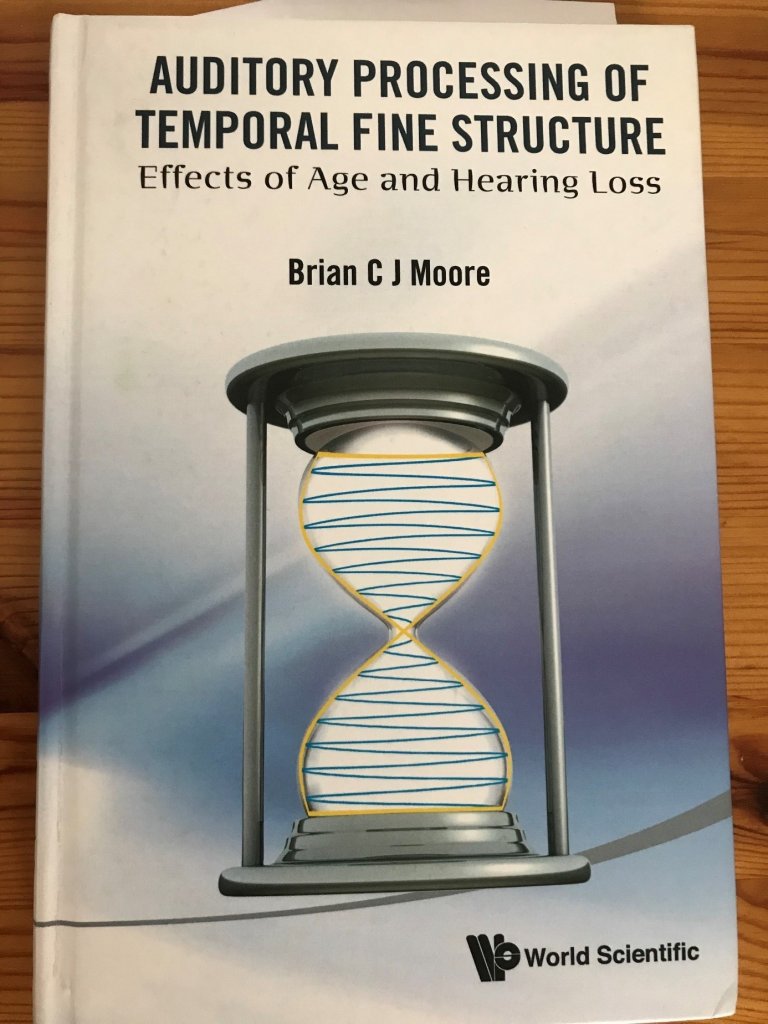

One area that Moore has explored extensively which affects how to approach hearing aid amplification is the perception of temporal fine structure (TFS). Well, what is it? Moore wrote a short book, published in 2014, to discuss this subject area.

If we look at the representation of a signal in Figure 1, which conveniently is the cover of Moore’s book (Moore, 2014), we can see the rapidly changing amplitude of the waveform over time (seen in blue); this is known as the TFS. There is another part of the waveform that changes in amplitude at a slower rate, and this is the envelope (ENV) (seen in yellow). Moore states in the first chapter of his 2014 book that information about sound is found in both the TFS and the ENV. A growing number of research studies have shown that most hearing-impaired people have a reduced ability to extract information from the TFS (Hopkins & Moore, 2007; Moore, 2008). This is also apparent with age. As clients get older, they have an increasingly difficult time accessing TFS cues in speech and potentially rely more on information contained in the ENV (Souza & Kitch, 2001; Lunner et al., 2012). Moore (2008) suggested that an individual’s sensitivity to TFS may affect which compression speed gives the most benefit and that this sensitivity could be assessed in order to determine the time constants that are best for an individual. On this evidence, the best compression speed is very personal, depending upon the individual’s use of TFS and envelope cues. This question was examined further in a recent paper by Salorio-Corbetto et al. (2020), which also addressed the question of how many compression channels are needed in a hearing instrument. I will discuss this next.

Compression channels permit the use of different compression characteristics (time constants, compression ratios, level estimators, etc.) in specific frequency regions, i.e. bands. This provides a different compressive function depending on the nature and degree of the hearing loss. So, in the case of a sloping high-frequency loss, compression can be applied differently in the mid frequencies than in the high frequencies. Today’s multi-channel amplification is the result of sequential development to overcome problems, but in the process it has introduced compromises and many individual parameters (Moore et al., 2011). How many channels are optimum? My former colleague at Bernafon AG wrote an article, published on Audiology Online, whose title, “More channels are better, right?” (O’Brien, 2002), summarizes the commonly held belief. The answer is not so simple. Many factors are involved in determining the number of channels that are needed within a hearing aid, and the debate about the ideal number of channels has been going on for many years and will probably continue. This year a publication from Moore’s lab by Salorio-Corbetto et al. (2020) addressed this topic and the issue of compression speed. Using computer-simulated hearing aids, they looked at the effects of both the number of channels and the compression speed on speech perception. The compression was either fast acting (10 ms attack, 100 ms release) or slow acting (50 ms attack, 3000 ms release), using 3, 6, 12, and 22 channels. The 20 subjects were all individuals with a sensorineural hearing loss. The authors found that neither the number of compression channels nor the compression speed was related to the intelligibility of speech in two-talker and eight-talker babble noise. The authors concluded that measures of speech intelligibility in noise may not be a reliable basis for clinical decisions regarding compression speed. Subjective judgements and potentially cognitive measures should perhaps guide these decisions.
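To give a feel for what "fast" and "slow" compression mean in practice, the following Python sketch tracks the level of a signal with a simple one-pole smoother that uses separate attack and release time constants. This is a generic illustration, not the level estimator used by Salorio-Corbetto et al. (2020); the function names and the exponential definition of the time constants are assumptions, and hearing aid standards define attack and release times differently.

```python
import math


def smoothing_coefficient(time_ms, sample_rate_hz):
    """One-pole smoothing coefficient for a given time constant (assumed definition)."""
    return math.exp(-1.0 / (time_ms / 1000.0 * sample_rate_hz))


def track_level(levels_db, attack_ms, release_ms, sample_rate_hz):
    """Smooth a sequence of instantaneous levels (dB).

    The attack coefficient is used while the input is above the current
    estimate, and the release coefficient is used otherwise.
    """
    a_att = smoothing_coefficient(attack_ms, sample_rate_hz)
    a_rel = smoothing_coefficient(release_ms, sample_rate_hz)
    estimate = levels_db[0]
    tracked = []
    for level in levels_db:
        coeff = a_att if level > estimate else a_rel
        estimate = coeff * estimate + (1.0 - coeff) * level
        tracked.append(estimate)
    return tracked


# Hypothetical test signal: 10 ms at 50 dB, then a 50 ms step up to 80 dB,
# sampled once per millisecond.
step = [50.0] * 10 + [80.0] * 50
fast = track_level(step, attack_ms=10.0, release_ms=100.0, sample_rate_hz=1000.0)
slow = track_level(step, attack_ms=50.0, release_ms=3000.0, sample_rate_hz=1000.0)
```

Under these assumptions, the fast estimator has almost reached 80 dB by the end of the 50 ms step, whereas the slow estimator is still more than 10 dB below it. A fast system therefore changes its gain within a syllable, while a slow system largely preserves the short-term level contrasts in speech.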

Frequency Lowering Technology

Together with his colleagues, Brian Moore has also looked at other hearing aid technology that is designed to improve access to information in the acoustic signal for people with a sensorineural hearing loss. Moore has examined the subject of “dead regions” (DR) in the cochlea, where the inner hair cells (IHC) are non-functioning, which results in what is known as off-frequency listening. This happens when basilar membrane vibration, typically at a high frequency, is not detected in the region where there are non-functioning IHC but is detected by IHC in another region that is normally sensitive to lower frequencies (Moore, 2001).

This work on DR has led to the development of the threshold equalizing noise (TEN) test to diagnose them (Moore et al., 2000). Later work also found that the presence of DR in the cochlea can be predicted by the configuration of the audiogram (Aazh & Moore, 2007). With the description of DR in the cochlea, there was great interest in examining frequency lowering systems to address their perceptual consequences, with the goal of providing access to high frequency cues, especially for speech (Robinson et al., 2007). In Moore’s lab, Salorio-Corbetto et al. (2017) looked at consonant identification, word-final /s, z/ detection, and other measures using a frequency lowering system. The 10 subjects all had a high frequency hearing loss and included individuals with DR in the cochlea. Four of the subjects had previous experience with frequency lowering technology. It was found that the frequency lowering system improved the audibility of high frequency sounds for most of the subjects. The pattern of consonant confusions was mixed, with some improvements and some new confusions. There was also improved detection of word-final /s, z/. So, the results from this study were mixed, but they were far from discouraging for the hearing aid industry’s commitment to this technology. In a later investigation, Salorio-Corbetto et al. (2019) looked at two different methods of applying frequency lowering: frequency transposition and frequency compression. As in the previous study, there were 10 subjects, all of whom had a high frequency hearing loss together with confirmed DR. It was found that both technologies could improve the audibility of high frequency sounds for most of the subjects. Salorio-Corbetto et al. (2019) concluded that frequency transposition might have more potential for benefit for hearing-impaired individuals after training and wearing experience. As was concluded in the earlier study (Salorio-Corbetto et al., 2017), it was suggested that frequency lowering systems provide potential benefits for reducing acoustic feedback due to the reduced need for high frequency gain. Salorio-Corbetto et al. (2019) also pointed out that this reduced need for gain can potentially prevent the over-amplification that can result from attempts to match fitting rationale targets. So, despite the fact that Akinseye et al. (2018) suggested in their review article that more work was needed in order to accept that frequency lowering systems are beneficial to both adults and children, researchers working with Brian Moore in Cambridge found that there are other benefits that could be obtained from this approach to amplification. Research in the area of DR and the techniques that can be used to help improve audibility for high frequency information continues as hearing aids develop more and more processing power and capabilities.

Summary

In this article, I have summarized some areas of Brian Moore’s work that I feel have been important in hearing aid development. There are other areas where Moore has also contributed greatly to hearing aid development that I have not discussed, but should be noted, such as music (e.g. Moore, 2012b; Madsen and Moore, 2014), models of loudness perception (e.g. Moore et al., 1997) and fitting rationales (Moore et al., 2010) to name but three. Given the large number of research areas, I would argue that Moore’s work is so influential that even if a developer is unaware of the specific papers or book chapters that address some aspect of processing in a hearing aid, the improvements that he or she is working on are likely building on work that was either directly or indirectly influenced by Brian Moore. Perhaps I cannot prove this statement, but I believe it to be true. Also, as clinicians busy in our daily work, it is also interesting to pause and consider that the hearing aid in our hands is probably influenced by Moore’s work. Within our field, we are very grateful for the work that Brian Moore has done, and it is safe to say that his work has benefitted many individuals with hearing loss around the globe.

Acknowledgments

I would like to thank the editors for inviting me to write this article. I would also like to thank Frank Digeser (HNO-Klinik, Universitätsklinikum Erlangen, Germany) for our discussion about hearing aid processing delay.

References

Aazh, H., & Moore, B. C. (2007). Dead regions in the cochlea at 4 kHz in elderly adults: relation to absolute threshold, steepness of audiogram, and pure-tone average. Journal of the American Academy of Audiology, 18(2), 97-106.

Akinseye, G. A., Dickinson, A. M., & Munro, K. J. (2018). Is non-linear frequency compression amplification beneficial to adults and children with hearing loss? A systematic review. International Journal of Audiology, 57(4), 262-273.

Hopkins, K. & Moore, B.C.J. (2007). Moderate cochlear hearing loss leads to a reduced ability to use temporal fine structure information, Journal of the Acoustical Society of America. 122: 1055-1068.

Ipser, A., Agolli, V., Bajraktari, A., Al-Alawi, F., Djaafara, N., & Freeman, E. D. (2017). Sight and sound persistently out of synch: stable individual differences in audiovisual synchronisation revealed by implicit measures of lip-voice integration. Scientific Reports, 7, 46413.

Launer, S. Zakis, J. A. & Moore, B.C.J. (2016). Hearing aid signal processing, Chapter 4 in Hearing Aids, G.R. Popelka, B.C.J. Moore, A.N. Popper & R.R. Fay, eds., Springer, New York.

Lunner T., Hietkamp, R.K., Andersen, M.R., Hopkins, K. & Moore, B.C.J. (2012). Effect of speech material on the benefit of temporal fine structure information in speech for young normal-hearing and older hearing-impaired participants, Ear and Hearing. 33: 377-388.

Madsen, S. M., & Moore, B. C. J. (2014). Music and hearing aids. Trends in Hearing, 18, 2331216514558271.

Moore, B.C.J. (1989). An Introduction to the Psychology of Hearing, (3rd ed.), Academic Press.

Moore, B. C. J. (2001). Dead regions in the cochlea: Diagnosis, perceptual consequences, and implications for the fitting of hearing aids. Trends in Amplification, 5(1), 1–34.

Moore, B.C.J. (2008). The choice of compression speed in hearing aids: Theoretical and practical considerations and the role of individual differences. Trends in Amplification, 12(2), 103-112.

Moore, B. C. J. (2012a). An Introduction to the Psychology of Hearing (6th ed.), Bingley, UK: Emerald.

Moore, B.C.J. (2012b). Effects of bandwidth, compression speed, and gain at high frequencies on preferences for amplified music, Trends in Amplification, 16: 159-172.

Moore, B.C.J. (2014). Auditory Processing of Temporal Fine Structure: Effects of Age and Hearing Loss. World Scientific, Singapore.

Moore, B. C. J., Füllgrabe, C., & Stone, M. A. (2011). Determination of preferred parameters for multichannel compression using individually fitted simulated hearing aids and paired comparisons. Ear and Hearing, 32(5), 556-568.

Moore, B. C. J., Glasberg, B. R., & Baer, T. (1997). A model for the prediction of thresholds, loudness, and partial loudness. Journal of the Audio Engineering Society, 45(4), 224-240.

Moore, B. C. J., Glasberg, B. R., & Stone, M. A. (2010). Development of a new method for deriving initial fittings for hearing aids with multi-channel compression: CAMEQ2-HF. International Journal of Audiology, 49(3), 216–227.

Moore, B. C. J., Huss, M., Vickers, D. A., Glasberg, B. R., & Alcántara, J. I. (2000). A test for the diagnosis of dead regions in the cochlea. British Journal of Audiology, 34(4), 205-224.

Moore, B. C. J., Wojtczak, M., & Vickers, D. A. (1996). Effect of loudness recruitment on the perception of amplitude modulation. The Journal of the Acoustical Society of America, 100(1), 481-489.

O’Brien, A. (2002). More channels are better, right? Audiology Online. Retrieved from: https://www.audiologyonline.com/articles/more-channels-are-better-right-1183

Robinson, J., Baer, T. & Moore, B.C.J. (2007). Using transposition to improve consonant discrimination and detection for listeners with severe high-frequency hearing loss, International Journal of Audiology, 46: 293-308.

Salorio-Corbetto, M., Baer, T., & Moore, B. C. J. (2017). Evaluation of a frequency-lowering algorithm for adults with high-frequency hearing loss. Trends in Hearing, 21, 2331216517734455.

Salorio-Corbetto, M., Baer, T., & Moore, B. C. J. (2019). Comparison of frequency transposition and frequency compression for people with extensive dead regions in the cochlea. Trends in Hearing. 2331216518822206.

Salorio-Corbetto, M., Baer, T., Stone, M. A., & Moore, B. C. (2020). Effect of the number of amplitude-compression channels and compression speed on speech recognition by listeners with mild to moderate sensorineural hearing loss. The Journal of the Acoustical Society of America, 147(3), 1344-1358.

Souza, P. E. & Kitch, V. (2001). The contribution of amplitude envelope cues to sentence identification in young and aged listeners. Ear and Hearing, 22(4), 112-119.

Stone, M.A. & Moore, B.C.J. (1999). Tolerable hearing-aid delays. I. Estimation of limits imposed by the auditory path alone using simulated hearing losses, Ear and Hearing, 20: 182-192.

Stone, M.A. & Moore, B.C.J. (2002). Tolerable hearing-aid delays. II. Estimation of limits imposed during speech production, Ear and Hearing, 23: 325-338.

Stone, M.A. & Moore, B.C.J. (2003). Tolerable hearing-aid delays. III. Effects on speech production and perception of across-frequency variation in delay, Ear and Hearing, 24: 175-183.

Stone, M.A. & Moore, B.C.J. (2005). Tolerable hearing-aid delays: IV. Effects on subjective disturbance during speech production by hearing-impaired subjects, Ear and Hearing, 26: 225-235.

Stone, M.A., Moore, B.C.J., Meisenbacher, K., & Derleth, R.P. (2008). Tolerable hearing-aid delays. V. Estimation of limits for open canal fittings, Ear and Hearing, 29: 601-617.

University of Cambridge (2019). Department of Psychology: Professor Brian C.J. Moore. Retrieved from: https://www.psychol.cam.ac.uk/people/professor_brian_moore

Zirn, S., Angermeier, J., Arndt, S., Aschendorff, A., & Wesarg, T. (2019). Reducing the device delay mismatch can improve sound localization in bimodal cochlear implant/hearing-aid users. Trends in Hearing, 23, 2331216519843876.